Antibody-antigen modelling tutorial using a local version of HADDOCK3

This tutorial consists of the following sections:

- Introduction

- HADDOCK general concepts

- A brief introduction to HADDOCK3

- Software and data setup

- Preparing PDB files for docking

- Defining restraints for docking

- Setting up and running the docking with HADDOCK3

- HADDOCK3 workflow definition

- Running HADDOCK3

- Execution of HADDOCK3 on the computers of the BioExcel 2025 summerschool

- Execution of HADDOCK3 on DISCOVERER (BioExcel Sofia May 2025 workshop)

- Execution of HADDOCK3 on the TRUBA resources (EuroCC Istanbul April 2024 workshop)

- Execution of HADDOCK3 on Fugaku (ASM 2026 HPC/AI school, Kobe Japan)

- Execution of HADDOCK3 on ADD Ljubljana (BioExcel Adriatic edition 2026, Ljubljana, Slovenia)

- Learn more about the various execution modes of haddock3

- Analysis of docking results

- Conclusions

- BONUS 1: Dissecting the interface energetics: what is the impact of a single mutation?

- BONUS 2: Does the antibody bind to a known interface of interleukin? ARCTIC-3D analysis

- BONUS 3: How good are AI-based models of antibody for docking?

- BONUS 4: Ensemble docking using a combination of experimental and AI-predicted antibody structures

- BONUS 5: Antibody-antigen complex structure prediction from sequence using AlphaFold2

- BONUS 6: Running a scoring scenario

- Congratulations! 🎉

Introduction

This tutorial demonstrates the use of the new modular HADDOCK3 version for predicting the structure of an antibody-antigen complex using knowledge of the hypervariable loops on the antibody (i.e., the most basic knowledge) and epitope information identified from NMR experiments for the antigen to guide the docking.

This tutorial was recorded at the BioExcel Sofia Workshop in May 2025:

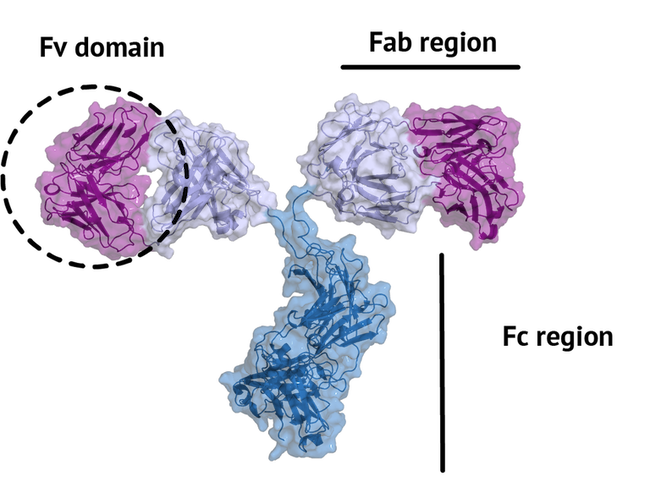

An antibody is a large protein that generally works by attaching itself to an antigen, which is a unique site of the pathogen. The binding harnesses the immune system to directly attack and destroy the pathogen. Antibodies can be highly specific while showing low immunogenicity (i.e. the ability to provoke an immune response), which is achieved by their unique structure. The fragment crystallizable region (Fc region) activates the immune response and is species-specific, i.e. the human Fc region should not induce an immune response in humans. The fragment antigen-binding region (Fab region) needs to be highly variable to be able to bind to antigens of various nature (high specificity). In this tutorial, we will concentrate on the terminal variable domain (Fv) of the Fab region.

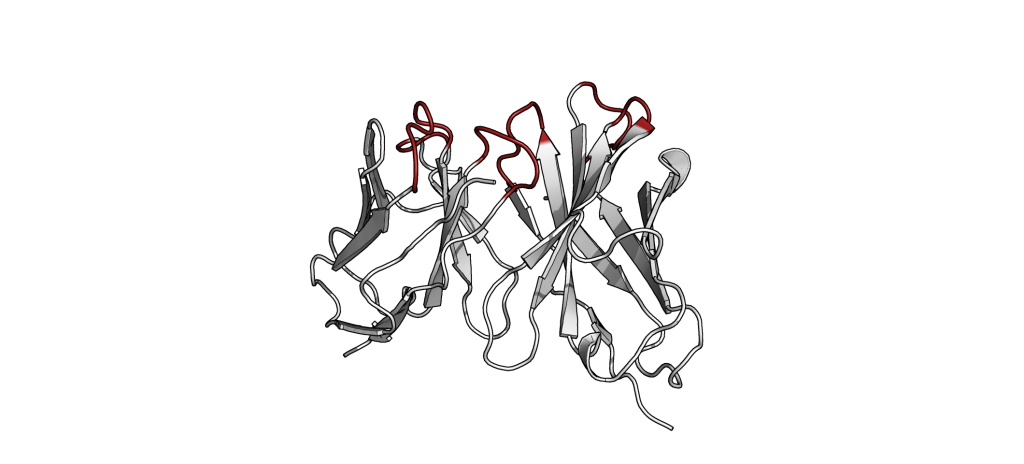

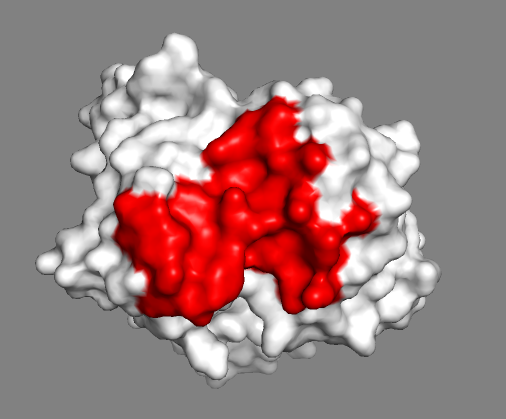

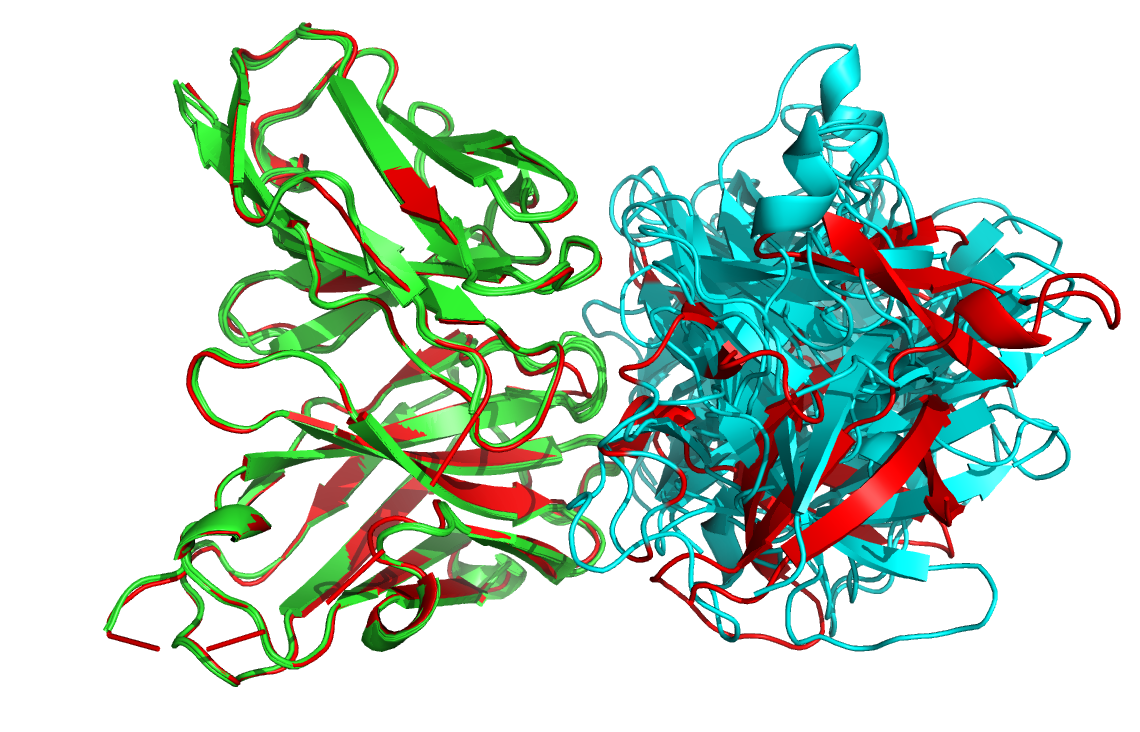

The small part of the Fab region that binds the antigen is called the paratope. The part of the antigen that binds to an antibody is called the epitope. The paratope consists of six highly flexible loops, known as complementarity-determining regions (CDRs) or hypervariable loops, whose sequence and conformation are altered to bind to different antigens. CDRs are shown in red in the figure below:

In this tutorial we will be working with Interleukin-1β (IL-1β) (PDB code 4I1B) as an antigen and its highly specific monoclonal antibody gevokizumab (PDB code 4G6K) (PDB code of the complex 4G6M).

Throughout the tutorial, colored text will be used to refer to questions or instructions, and/or PyMOL commands.

- This is a question prompt: try answering it!

- This is an instruction prompt: follow it!

- This is a PyMOL prompt: write this in the PyMOL command line prompt!

- This is a Linux prompt: insert the commands in the terminal!

HADDOCK general concepts

HADDOCK (see https://www.bonvinlab.org/software/haddock2.4) is a collection of python scripts derived from ARIA (https://aria.pasteur.fr) that harness the power of CNS (Crystallography and NMR System – https://cns-online.org) for structure calculation of molecular complexes. What distinguishes HADDOCK from other docking software is its ability, inherited from CNS, to incorporate experimental data as restraints and use these to guide the docking process alongside traditional energetics and shape complementarity. Moreover, the intimate coupling with CNS endows HADDOCK with the ability to actually produce models of sufficient quality to be archived in the Protein Data Bank.

A central aspect of HADDOCK is the definition of Ambiguous Interaction Restraints or AIRs. These allow the translation of raw data such as NMR chemical shift perturbation or mutagenesis experiments into distance restraints that are incorporated into the energy function used in the calculations. AIRs are defined through a list of residues that fall under two categories: active and passive. Generally, active residues are those of central importance for the interaction, such as residues whose knockouts abolish the interaction or those where the chemical shift perturbation is higher. Throughout the simulation, these active residues are restrained to be part of the interface, if possible, otherwise incurring a scoring penalty. Passive residues are those that contribute to the interaction but are deemed of less importance. If such a residue does not belong in the interface there is no scoring penalty. Hence, a careful selection of which residues are active and which are passive is critical for the success of the docking.

A brief introduction to HADDOCK3

HADDOCK3 is the next generation integrative modelling software in the long-lasting HADDOCK project. It represents a complete rethinking and rewriting of the HADDOCK2.X series, implementing a new way to interact with HADDOCK and offering new features to users who can now define custom workflows.

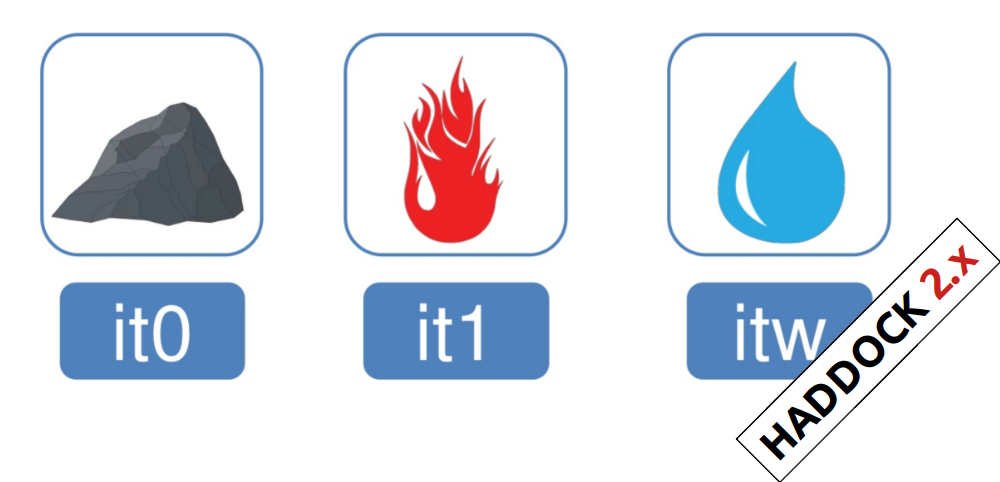

In the previous HADDOCK2.x versions, users had access to a highly parameterisable yet rigid simulation pipeline composed of three steps: rigid-body docking (it0), semi-flexible refinement (it1), and final refinement (itw).

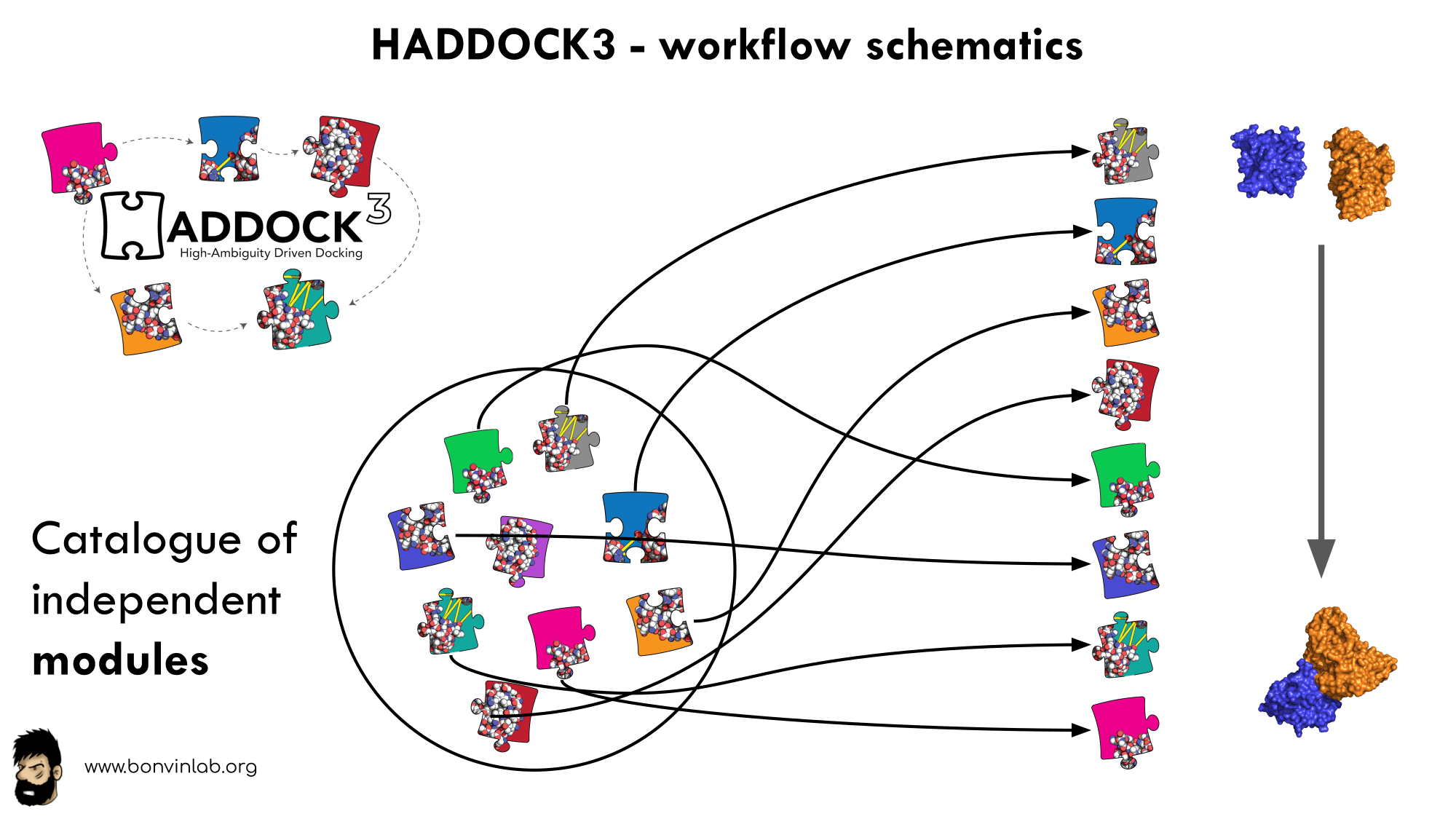

In HADDOCK3, users have the freedom to configure docking workflows into functional pipelines by combining the different HADDOCK3 modules, thus adapting the workflows to their projects. HADDOCK3 has therefore been developed to work like a puzzle of many pieces (simulation modules) that users can combine freely. To this end, the “old” HADDOCK machinery has been modularized, and several new modules added, including third-party software additions. As a result, the modularization achieved in HADDOCK3 allows users to duplicate steps within one workflow (e.g., to repeat the it1 stage of the rigid HADDOCK2.x workflow twice).

Note that, for simplification purposes, at this time, not all functionalities of HADDOCK2.x have been ported to HADDOCK3, which does not (yet) support NMR RDC, PCS and diffusion anisotropy restraints, cryo-EM restraints and coarse-graining. Any type of information that can be converted into ambiguous interaction restraints can, however, be used in HADDOCK3, which also supports the ab initio docking modes of HADDOCK.

To keep HADDOCK3 modules organized, we catalogued them into several categories. However, there are no constraints on piping modules of different categories.

The main module categories are “topology”, “sampling”, “refinement”, “scoring”, and “analysis”. There is no limit to how many modules can belong to a category. Modules are added as developed, and new categories will be created if/when needed. You can access the HADDOCK3 documentation page for the list of all categories and modules. Below is a summary of the available modules:

- Topology modules
    - `topoaa`: generates the all-atom topologies for the CNS engine.

- Sampling modules
    - `rigidbody`: Rigid body energy minimization with CNS (`it0` in haddock2.x).
    - `lightdock`: Third-party glow-worm swarm optimization docking software.

- Model refinement modules
    - `flexref`: Semi-flexible refinement using a simulated annealing protocol through molecular dynamics simulations in torsion angle space (`it1` in haddock2.x).
    - `emref`: Refinement by energy minimisation (`itw` EM only in haddock2.4).
    - `mdref`: Refinement by a short molecular dynamics simulation in explicit solvent (`itw` in haddock2.X).

- Scoring modules
    - `emscoring`: scoring of a complex performing a short EM (builds the topology and all missing atoms).
    - `mdscoring`: scoring of a complex performing a short MD in explicit solvent + EM (builds the topology and all missing atoms).

- Analysis modules
    - `alascan`: Performs a systematic (or user-defined) alanine scanning mutagenesis of interface residues.
    - `caprieval`: Calculates CAPRI metrics (i-RMSD, l-RMSD, Fnat, DockQ) with respect to the top-scoring model or reference structure if provided.
    - `clustfcc`: Clusters models based on the fraction of common contacts (FCC).
    - `clustrmsd`: Clusters models based on the pairwise RMSD matrix calculated with the `rmsdmatrix` module.
    - `contactmap`: Generates contact matrices of both intra- and intermolecular contacts and a chordchart of intermolecular contacts.
    - `rmsdmatrix`: Calculates the pairwise RMSD matrix between all the models generated in the previous step.
    - `ilrmsdmatrix`: Calculates the pairwise interface-ligand-RMSD (il-RMSD) matrix between all the models generated in the previous step.
    - `seletop`: Selects the top N models from the previous step.
    - `seletopclusts`: Selects the top N clusters from the previous step.

The HADDOCK3 workflows are defined in simple configuration text files, similar to the TOML format but with extra features. Contrary to HADDOCK2.X which follows a rigid (yet highly parameterisable) procedure, in HADDOCK3, you can create your own simulation workflows by combining a multitude of independent modules that perform specialized tasks.

Software and data setup

In order to follow this tutorial you will need to work on a Linux or MacOSX system. We will also make use of PyMOL (freely available for most operating systems) in order to visualize the input and output data. We will provide you links to download the various required software and data.

Further, we are providing pre-processed PDB files for docking and analysis (but the preprocessing of those files will also be explained in this tutorial). The files have been processed to facilitate their use in HADDOCK and to allow comparison with the known reference structure of the complex.

If you are running this tutorial on your own resources download and unzip the following zip archive and note the location of the extracted PDB files in your system.

If running as part of the ASM HPC/AI school or a BioExcel workshop or summerschool see the instructions in the respective next sections.

Note that you can also download and unzip this archive directly from the Linux command line:

Unzipping the file will create the HADDOCK3-antibody-antigen directory, which should contain the following directories and files:

- `pdbs`: a directory containing the pre-processed PDB files

- `restraints`: a directory containing the interface information and the corresponding restraint files for HADDOCK3

- `runs`: a directory containing pre-calculated results

- `scripts`: a directory containing various scripts used in this tutorial

- `workflows`: a directory containing configuration file examples for HADDOCK3

In the case of a workshop or course, HADDOCK3 will usually have been installed on the system you will be using.

In case HADDOCK3 is not pre-installed in your system, you will have to install it. To obtain HADDOCK3, fill the registration form, and then follow the installation instructions.

In this tutorial we will use the PyMOL molecular visualisation system. If not already installed, download and install PyMOL from here. You can use your favourite visualisation software instead, but be aware that instructions in this tutorial are provided only for PyMOL.

This tutorial was last tested using HADDOCK3 version 2024.10.0b7. The provided pre-calculated runs were obtained on a MacBook Pro with an M2 processor running macOS Sequoia 15.3.1.

BioExcel Adriatic edition 2026, Ljubljana, Slovenia

You can either:

- make use of the ADD HPC system for this tutorial, working at the command line,

- or start a Colab notebook (provided you have Google credentials) and follow the instructions in that notebook (simpler).

If running on the HPC system, the software and data required for this tutorial have been pre-installed. Please connect to the HPC system using your credentials via an ssh connection.

In order to run the tutorial, go into your data directory, then copy and unzip the required data:

unzip /home/vreys/HADDOCK3-antibody-antigen.zip

This will create the HADDOCK3-antibody-antigen directory with all necessary data and scripts and job examples ready for submission to the batch system.

HADDOCK3 has been pre-installed on the compute nodes. To test the installation, first create an interactive session on a node with:

salloc --job-name=interactive_haddock3 --partition=amd --nodes=1 --cpus-per-task=8 --time-min=120

Once the session is active, activate HADDOCK3 with:

source /home/vreys/haddock3/.haddock3-env/bin/activate

You can then test that haddock3 is indeed accessible with:

haddock3 -h

You should see a small help message explaining how to use the software.

View output

(haddock3)$ haddock3 -h

usage: haddock3 [-h] [--restart RESTART] [--extend-run EXTEND_RUN] [--setup]

[--log-level {DEBUG,INFO,WARNING,ERROR,CRITICAL}] [-v]

recipe

positional arguments:

recipe The input recipe file path

optional arguments:

-h, --help show this help message and exit

--restart RESTART Restart the run from a given step. Previous folders from the

selected step onward will be deleted.

--extend-run EXTEND_RUN

Start a run from a run directory previously prepared with the

`haddock3-copy` CLI. Provide the run directory created with

`haddock3-copy` CLI.

--setup Only setup the run, do not execute

--log-level {DEBUG,INFO,WARNING,ERROR,CRITICAL}

-v, --version show version

ASM 2026 HPC/AI school, Kobe, Japan, February 2026


You can either:

- make use of the Fugaku supercomputer for this tutorial, working at the command line,

- or [start a Colab notebook](https://colab.research.google.com/github/haddocking/haddock3/blob/main/notebooks/HADDOCK3-antibody-antigen.ipynb) (provided you have Google credentials) and follow the instructions in that notebook (simpler).

If running on Fugaku, the software and data required for this tutorial have been pre-installed. Please connect to Fugaku using your credentials either via an ssh connection or from a web browser using OnDemand: [https://ondemand.fugaku.r-ccs.riken.jp/](https://ondemand.fugaku.r-ccs.riken.jp/)

If using OnDemand, then open a terminal session, requesting one node and 48 processes, and change the working directory to your directory under _/vol0300/data/hp250477/Students/..._.

In order to run the tutorial, go into your data directory, then copy and unzip the required data:

unzip /vol0300/data/hp250477/Materials/Life_Science/20260202-HADDOCK/HADDOCK3-antibody-antigen.zip

This will create the `HADDOCK3-antibody-antigen` directory with all necessary data and scripts and job examples ready for submission to the batch system.

HADDOCK3 has been pre-installed on the compute nodes. To test the installation, first create an interactive session on a node with:

pjsub --interact -L "node=1" -L "rscgrp=int" -L "elapse=2:00:00" --sparam "wait-time=600" -g hp250477 -x PJM_LLIO_GFSCACHE=/vol0003:/vol0004

Once the session is active, activate HADDOCK3 with:

source /vol0300/data/hp250477/Materials/Life_Science/20260202-HADDOCK/haddock3/.venv/bin/activate

You can then test that `haddock3` is indeed accessible with:

haddock3 -h

You should see a small help message explaining how to use the software.


BioExcel summerschool, Pula, Sardinia June 2025


We will be making use of the local computers for this tutorial. The software and data required for this tutorial have been pre-installed.

In order to run the tutorial, go into the HADDOCK3-antibody-antigen directory and activate the HADDOCK3 environment.

This directory contains all necessary data and scripts to run this tutorial. To activate the HADDOCK3 environment type:

haddock3env

which is an alias for:

source ~/BioExcel_SS_2025/HADDOCK/haddock3/.venv/bin/activate

You can then test that haddock3 is accessible with:

haddock3 -h

You should see a small help message explaining how to use the software.


Local setup (on your own)


If you are installing HADDOCK3 on your own system, check the instructions and requirements below.

Installing HADDOCK3

To obtain HADDOCK3, fill the registration form, and then follow the installation instructions.

Note that depending on the system you are installing HADDOCK3 on, you might have to recompile CNS if the provided executable is not working. See the CNS troubleshooting section on the HADDOCK3 GitHub repository for instructions.

Auxiliary software

PyMOL: In this tutorial we will make use of PyMOL for visualization. If not already installed on your system, download and install PyMOL. Note that you can use your favorite visualization software, but instructions are only provided here for PyMOL.

Preparing PDB files for docking

In this section we will prepare the PDB files of the antibody and antigen for docking. Crystal structures of both the antibody and the antigen in their free forms are available from the PDBe database.

Important: For a docking run with HADDOCK, each molecule should consist of a single chain with non-overlapping residue numbering within the same chain.

As an antibody consists of two chains (L+H), we will have to prepare it for use in HADDOCK. For this we will be making use of pdb-tools from the command line.

Note that pdb-tools is also available as a web service.

Preparing the antibody structure

Using PDB-tools we will download the unbound structure of the antibody from the PDB database (the PDB ID is 4G6K) and then process it to have a unique chain ID (A) and non-overlapping residue numbering by renumbering the merged pdb (starting from 1). For this we will concatenate the following PDB-tools commands:

- fetch the PDB entry from the PDB database (`pdb_fetch`),

- clean the PDB file (`pdb_tidy`),

- select the chain (`pdb_selchain`),

- remove any hetero atoms from the structure (e.g. crystal waters, small molecules from the crystallisation buffer and such) (`pdb_delhetatm`),

- fix residue numbering insertions in the HV loops (often occurring in antibodies, see note below) (`pdb_fixinsert`),

- remove any possible side-chain duplication (can be present in high-resolution crystal structures in case of multiple conformations of some side chains) (`pdb_selaltloc`),

- keep only the coordinate lines (`pdb_keepcoord`),

- select only the variable domain (FV) of the antibody (to reduce computing time) (`pdb_selres`),

- clean the PDB file (`pdb_tidy`).

Note that the `pdb_tidy -strict` command cleans the PDB file and adds TER statements only between different chains. Without the `-strict` option, TER statements would be added at each chain break (e.g. missing residues), which should be avoided.

Note: An important part for antibodies is the pdb_fixinsert command that fixes the residue numbering of the HV loops:

Antibodies often follow the Chothia numbering scheme, and insertions created by this numbering scheme (e.g. 82A, 82B, 82C) cannot be processed by HADDOCK directly (if they are not fixed, those residues will not be considered, effectively resulting in a break in the loop). As such, renumbering is necessary before starting the docking.

This can be done from the command line with:

pdb_fetch 4G6K | pdb_tidy -strict | pdb_selchain -H | pdb_delhetatm | pdb_fixinsert | pdb_selaltloc | pdb_keepcoord | pdb_selres -1:120 | pdb_tidy -strict > 4G6K_H.pdb

pdb_fetch 4G6K | pdb_tidy -strict | pdb_selchain -L | pdb_delhetatm | pdb_fixinsert | pdb_selaltloc | pdb_keepcoord | pdb_selres -1:107 | pdb_tidy -strict > 4G6K_L.pdb

We then combined the heavy and light chain into one, renumbering the residues starting at 1 to avoid overlap in residue numbering between the chains and assigning a unique chainID/segID:

Note The ready-to-use file can be found in the pdbs directory of the provided tutorial data.
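To make the merging and renumbering step more concrete, here is a minimal Python sketch of the idea. It is not the pdb-tools implementation: the function names `make_atom_line` and `renumber_and_rechain` are hypothetical, the sketch assumes standard fixed-column PDB ATOM/HETATM records and a single-character chain ID, and it only keeps coordinate lines.

```python
def make_atom_line(serial, name, resname, chain, resseq, icode=" ",
                   x=0.0, y=0.0, z=0.0):
    """Build a fixed-column PDB ATOM record (hypothetical helper for the demo)."""
    line = (f"ATOM  {serial:>5d} {name:<4s} {resname:>3s} {chain}{resseq:>4d}{icode}"
            f"   {x:8.3f}{y:8.3f}{z:8.3f}  1.00  0.00")
    return line.ljust(80)


def renumber_and_rechain(pdb_lines, chain_id="A", start=1):
    """Renumber residues sequentially and assign one chain ID and segID.

    Illustrative sketch only; assumes chain_id is a single character.
    """
    out = []
    previous_key = None
    number = start - 1
    for line in pdb_lines:
        if not line.startswith(("ATOM", "HETATM")):
            continue  # this sketch keeps coordinate records only
        line = line.rstrip("\n").ljust(80)
        # Columns 22-27 hold the chain ID, residue number and insertion code,
        # so residues 82, 82A and 82B count as three different residues.
        key = line[21:27]
        if key != previous_key:
            number += 1
            previous_key = key
        out.append(
            line[:21] + chain_id + f"{number:>4d}" + " "  # chain, number, no insertion code
            + line[27:72] + f"{chain_id:<4s}" + line[76:]  # segID in columns 73-76
        )
    return out


# Two heavy-chain residues (one with an insertion code) followed by a light-chain residue.
heavy = [make_atom_line(1, " CA ", "SER", "H", 82),
         make_atom_line(2, " CA ", "LEU", "H", 82, "A")]
light = [make_atom_line(3, " CA ", "ASP", "L", 1)]
for merged_line in renumber_and_rechain(heavy + light):
    print(merged_line[17:27], merged_line[72:76])
```

Keying on chain ID, residue number and insertion code together is what prevents two residues from different chains, or an inserted residue such as 82A, from collapsing onto the same number.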

Preparing the antigen structure

Using PDB-tools, we will now download the unbound structure of Interleukin-1β from the PDB database (the PDB ID is 4I1B), remove the hetero atoms and then process it to assign it chainID B.

Important: Each molecule given to HADDOCK in a docking scenario must have a unique chainID/segID.

Defining restraints for docking

Before setting up the docking, we first need to generate distance restraint files in a format suitable for HADDOCK. HADDOCK uses CNS as computational engine. A description of the format for the various restraint types supported by HADDOCK can be found in our Nature Protocol 2024 paper, Box 1.

Distance restraints are defined as follows:

assign (selection1) (selection2) distance, lower-bound correction, upper-bound correction

The lower limit for the distance is calculated as: distance minus lower-bound correction and the upper limit as: distance plus upper-bound correction.

The syntax for the selections can combine information about:

- chainID - `segid` keyword

- residue number - `resid` keyword

- atom name - `name` keyword.

Other keywords can be used in various combinations of OR and AND statements. For details, please refer to the online CNS manual.

E.g.: a distance restraint between the CB carbons of residues 10 and 200 in chains A and B with an allowed distance range between 10Å and 20Å would be defined as follows:

assign (segid A and resid 10 and name CB) (segid B and resid 200 and name CB) 20.0 10.0 0.0
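As a quick check of the bound arithmetic described above, the following Python sketch reads the three trailing numbers of a single-line restraint and returns the allowed range. The function name `restraint_bounds` is hypothetical and the sketch assumes the whole `assign` statement sits on one line, as in the example.

```python
def restraint_bounds(assign_line):
    """Return (lower, upper) distance limits in Angstrom for a one-line restraint."""
    # Everything after the last closing parenthesis holds the three numbers:
    # distance, lower-bound correction, upper-bound correction.
    tail = assign_line.rsplit(")", 1)[1]
    distance, minus, plus = (float(token) for token in tail.split()[:3])
    return distance - minus, distance + plus


example = ("assign (segid A and resid 10 and name CB) "
           "(segid B and resid 200 and name CB) 20.0 10.0 0.0")
print(restraint_bounds(example))  # (10.0, 20.0)
```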

Identifying the paratope of the antibody

Nowadays several computational tools can identify the paratope (the residues of the hypervariable loops involved in binding) from the provided antibody sequence. In this tutorial, we are providing you with the corresponding list of residues obtained using ProABC-2. ProABC-2 uses a convolutional neural network to identify not only residues which are located in the paratope region but also the nature of interactions they are most likely involved in (hydrophobic or hydrophilic). The work is described in Ambrosetti et al., Bioinformatics, 2020.

The corresponding paratope residues (those with either an overall probability >= 0.4 or a probability for hydrophobic or hydrophilic > 0.3) are:

31,32,33,34,35,52,54,55,56,100,101,102,103,104,105,106,151,152,169,170,173,211,212,213,214,216

The numbering corresponds to the numbering of the 4G6K_clean.pdb PDB file.
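The same residue list is needed in several forms later on (a PyMOL selection, a space-separated line for a restraints input file). A small Python sketch, with hypothetical variable and function names, can do the conversion. The `resi 31+32+...` form is standard PyMOL selection syntax.

```python
paratope_csv = ("31,32,33,34,35,52,54,55,56,100,101,102,103,104,105,106,"
                "151,152,169,170,173,211,212,213,214,216")


def parse_residues(text):
    """Parse a comma- or space-separated residue list into sorted unique integers."""
    return sorted({int(token) for token in text.replace(",", " ").split()})


residues = parse_residues(paratope_csv)

# PyMOL selection string, usable for instance as: select paratope, (resi 31+32+...)
pymol_selection = "resi " + "+".join(str(r) for r in residues)

# Space-separated form, as used for the active/passive residue files
act_pass_line = " ".join(str(r) for r in residues)

print(len(residues))
print(pymol_selection)
```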

Let us visualize those onto the 3D structure.

For this start PyMOL and load 4G6K_clean.pdb

File menu -> Open -> select 4G6K_clean.pdb

Alternatively, if PyMOL is accessible from the command line, simply type:

We will now highlight the predicted paratope residues in red. In PyMOL type the following commands:

Let us now switch to a surface representation to inspect the predicted binding site.

Inspect the surface.

Do the identified paratope residues form a well-defined patch on the surface?

See surface view of the paratope

Identifying the epitope of the antigen

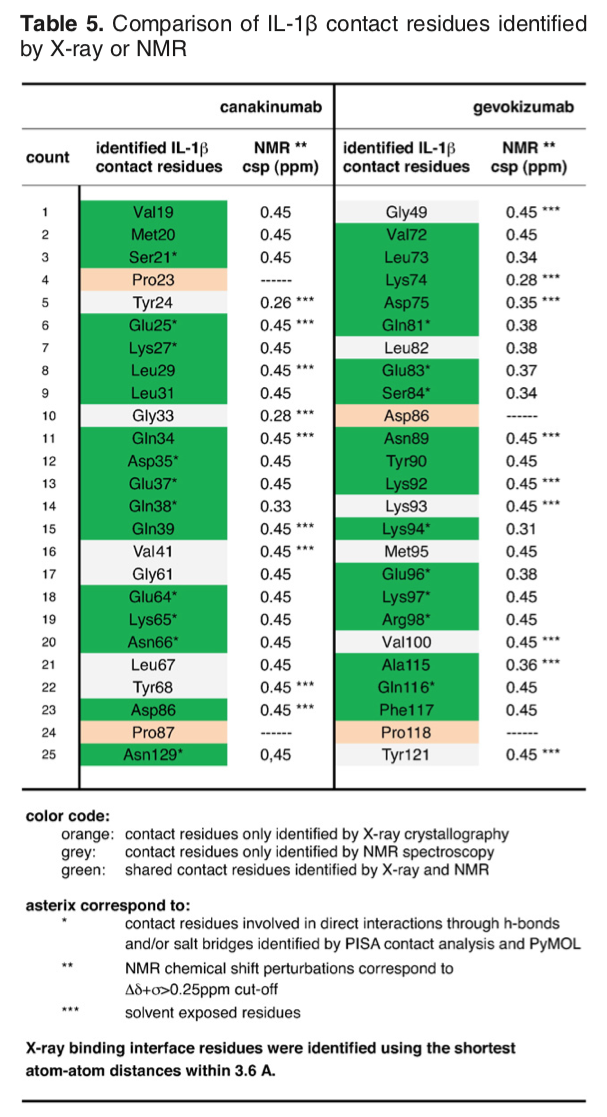

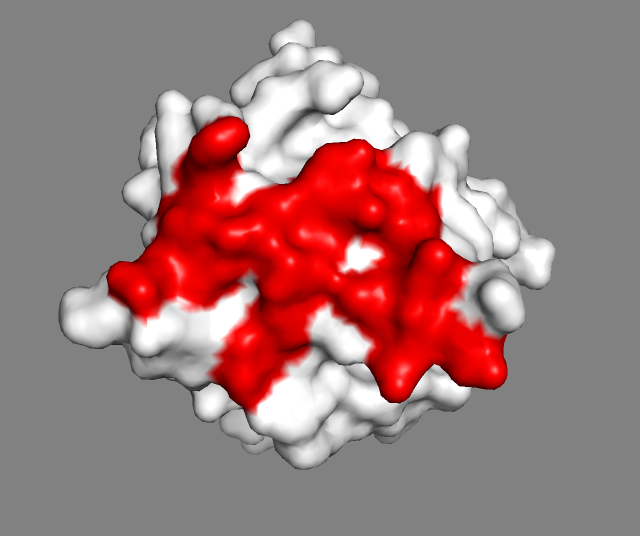

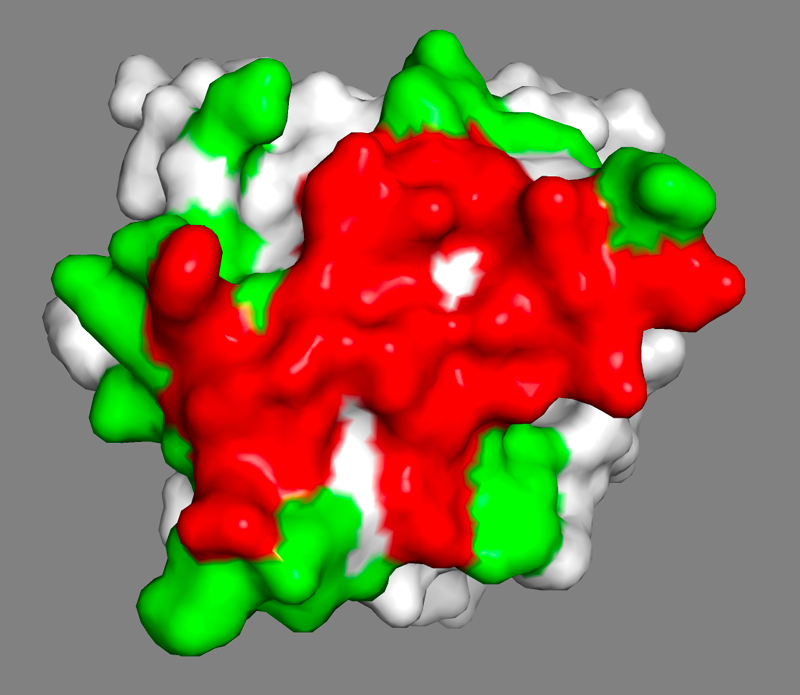

The article describing the crystal structure of the antibody-antigen complex we are modeling also reports experimental NMR chemical shift titration experiments to map the binding site of the antibody (gevokizumab) on Interleukin-1β. The residues affected by binding are listed in Table 5 of Blech et al. JMB 2013:

The list of binding site (epitope) residues identified by NMR is:

72,73,74,75,81,83,84,89,90,92,94,96,97,98,115,116,117

We will now visualize the epitope on Interleukin-1β. To do this, start PyMOL and open the provided PDB file of the antigen from the PyMOL File menu.

File menu -> Open -> select 4I1B_clean.pdb

Inspect the surface.

Do the identified residues form a well-defined patch on the surface?

The answer to that question should be yes, but we can see some residues not colored that might also be involved in the binding - there are some white spots around/in the red surface.

See surface view of the epitope identified by NMR

In HADDOCK, we deal with potentially incomplete binding sites by defining surface neighbors as passive residues. These passive residues are added to the definition of the interface but do not incur any energetic penalty if they are not part of the binding site in the final models. In contrast, residues defined as active (typically the identified or predicted binding site residues) will incur an energetic penalty if they are not part of the interface.

When using the HADDOCK2.x webserver, passive residues will be automatically defined. Here, since we are using a local version, we need to define those manually. This can easily be done using a haddock3 command line tool in the following way:

The command prints a list of solvent accessible passive residues, which you should save to a file for further use.

We can visualize the epitope and its surface neighbors using PyMOL:

File menu -> Open -> select 4I1B_clean.pdb

See the epitope and passive residues

The NMR-identified residues and their surface neighbors generated with the above command can be used to define ambiguous interaction restraints, either using the NMR-identified residues as active in HADDOCK, or combining those with the surface neighbors.

The difference between active and passive residues in HADDOCK is as follows:

Active residues: These residues are “forced” to be at the interface. If they are not part of the interface in the final models, an energetic penalty will be applied. The interface in this context is defined by the union of active and passive residues on the partner molecules.

Passive residues: These residues are expected to be at the interface. However, if they are not, no energetic penalty is applied.

In general, it is better to be too generous rather than too strict in the definition of passive residues.

An important aspect is to filter both the active (the residues identified from your mapping experiment) and passive residues by their solvent accessibility.

This is done automatically when using the haddock3-restraints passive_from_active command: residues with less than 15% relative solvent accessibility (same cutoff as the default in the HADDOCK server) are discarded.

This is, however, not a hard limit, and you might consider including even more buried residues if some important chemical group seems solvent accessible from a visual inspection.
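The accessibility filter itself is a simple threshold. The Python sketch below illustrates it under stated assumptions: the function name `filter_by_accessibility` is hypothetical, the relative solvent accessibility (RSA) values in the example are invented for illustration and are not computed from 4I1B, and residues without an RSA value are treated as buried.

```python
def filter_by_accessibility(residues, rsa_percent, cutoff=15.0):
    """Keep residues whose relative solvent accessibility (in %) reaches the cutoff."""
    # Residues missing from rsa_percent are treated as buried (an assumption of this sketch).
    return [r for r in residues if rsa_percent.get(r, 0.0) >= cutoff]


# Invented RSA values, for illustration only
example_rsa = {72: 45.0, 73: 8.0, 74: 15.0}
print(filter_by_accessibility([72, 73, 74, 75], example_rsa))
```

Passing a lower `cutoff` is one way to keep a more buried residue whose functional group still looks accessible on visual inspection.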

Defining ambiguous restraints

Once you have identified your active and passive residues for both molecules, you can proceed with the generation of the ambiguous interaction restraints (AIR) file for HADDOCK.

For this you can either make use of our online Generate Restraints haddock-restraints web service, entering the list of active and passive residues for each molecule, the chainIDs of each molecule and saving the resulting restraint list to a text file, or use another haddock3-restraints sub-command.

To use our haddock3-restraints active_passive_to_ambig script, you need to create for each molecule a file containing two lines:

- The first line corresponds to the list of active residues (numbers separated by spaces)

- The second line corresponds to the list of passive residues (numbers separated by spaces).

Important: The file must consist of two lines, but a line can be empty (e.g., if you do not want to define active residues for one molecule). However, at least one of the molecules must have active residues defined.
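The two-line layout can be checked with a few lines of Python before generating restraints. This is only a sketch of the format described above, not the parser used by haddock3-restraints, and `parse_act_pass` is a hypothetical name. A missing or empty line is read as an empty residue list.

```python
def parse_act_pass(text):
    """Parse the content of an active/passive file into two lists of residue numbers."""
    lines = text.splitlines()
    if any(line.strip() for line in lines[2:]):
        raise ValueError("expected at most two lines: active residues, then passive residues")
    lines = (lines + ["", ""])[:2]  # pad so that both lines always exist
    active = [int(token) for token in lines[0].split()]
    passive = [int(token) for token in lines[1].split()]
    return active, passive


# First molecule: active residues only. Second molecule: active and passive residues.
mol1_active, mol1_passive = parse_act_pass("31 32 33\n\n")
mol2_active, mol2_passive = parse_act_pass("72 73 74\n3 24 46\n")

# At least one molecule must define active residues.
if not (mol1_active or mol2_active):
    raise ValueError("no active residues defined for either molecule")
```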

- For the antibody we will use the predicted paratope as active and define no passive residues (the second line of the file is therefore empty). The corresponding file can be found in the `restraints` directory as `antibody-paratope.act-pass`:

31 32 33 34 35 52 54 55 56 100 101 102 103 104 105 106 151 152 169 170 173 211 212 213 214 216

- For the antigen we will use the NMR-identified epitope as active and the surface neighbors as passive. The corresponding file can be found in the `restraints` directory as `antigen-NMR-epitope.act-pass`:

72 73 74 75 81 83 84 89 90 92 94 96 97 98 115 116 117
3 24 46 47 48 50 66 76 77 79 80 82 86 87 88 91 93 95 118 119 120

Using those two files, we can generate the CNS-formatted Ambiguous Interaction Restraints (AIRs) file with the following command:

This generates a file called ambig-paratope-NMR-epitope.tbl that contains the AIRs.

Inspect the generated file and note how the ambiguous distances are defined.

View an extract of the AIR file

assign (resi 31 and segid A)

(

(resi 72 and segid B)

or

(resi 73 and segid B)

or

(resi 74 and segid B)

or

(resi 75 and segid B)

or

(resi 81 and segid B)

or

(resi 83 and segid B)

or

(resi 84 and segid B)

or

(resi 89 and segid B)

or

(resi 90 and segid B)

or

(resi 92 and segid B)

or

(resi 94 and segid B)

or

(resi 96 and segid B)

or

(resi 97 and segid B)

or

(resi 98 and segid B)

or

(resi 115 and segid B)

or

(resi 116 and segid B)

or

(resi 117 and segid B)

or

(resi 3 and segid B)

or

(resi 24 and segid B)

or

(resi 46 and segid B)

or

(resi 47 and segid B)

or

(resi 48 and segid B)

or

(resi 50 and segid B)

or

(resi 66 and segid B)

or

(resi 76 and segid B)

or

(resi 77 and segid B)

or

(resi 79 and segid B)

or

(resi 80 and segid B)

or

(resi 82 and segid B)

or

(resi 86 and segid B)

or

(resi 87 and segid B)

or

(resi 88 and segid B)

or

(resi 91 and segid B)

or

(resi 93 and segid B)

or

(resi 95 and segid B)

or

(resi 118 and segid B)

or

(resi 119 and segid B)

or

(resi 120 and segid B)

) 2.0 2.0 0.0

...
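The structure of the extract above (one `assign` statement per active residue, with an OR-list over the active and passive residues of the partner) can be reproduced with a short Python sketch. This is an illustration of the layout only, not the code behind `haddock3-restraints active_passive_to_ambig`. The function name `make_air` and its parameters are hypothetical, and the default distance values simply copy those seen in the extract.

```python
def make_air(active_1, segid_1, active_2, passive_2, segid_2,
             distance=2.0, minus=2.0, plus=0.0):
    """Write one ambiguous restraint per active residue of the first molecule.

    Each restraint points to all active and passive residues of the partner.
    """
    targets = list(active_2) + list(passive_2)
    if not targets:
        return ""
    statements = []
    for residue in active_1:
        lines = [f"assign (resi {residue} and segid {segid_1})", "("]
        for index, target in enumerate(targets):
            if index:
                lines.append("or")
            lines.append(f"(resi {target} and segid {segid_2})")
        lines.append(f") {distance:.1f} {minus:.1f} {plus:.1f}")
        statements.append("\n".join(lines))
    return "\n".join(statements)


# Small example: two active residues on molecule A, two active and one passive on molecule B.
air = make_air([31, 32], "A", [72, 73], [3], "B")
print(air)
```

To cover both directions, the function would be called a second time with the roles of the two molecules swapped, so that the active residues of the second molecule are restrained to the first.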

See answer

The default distance range for those is between 0 and 2Å, which might seem short but makes sense because of the 1/r^6 summation in the AIR energy function, which makes the effective distance significantly shorter than the shortest distance entering the sum. The effective distance is calculated as the SUM over all pairwise atom-atom distance combinations between an active residue and all the active+passive residues on the other molecule: SUM[1/r^6]^(-1/6).
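A few lines of Python show why the 1/r^6 sum pulls the effective distance below the shortest individual distance. The function name `effective_distance` and the example distances are illustrative and not taken from an actual model.

```python
def effective_distance(distances):
    """Effective distance: (sum over all pairs of 1/r^6) to the power -1/6."""
    return sum(r ** -6 for r in distances) ** (-1.0 / 6.0)


# A single atom pair gives back its own distance.
print(round(effective_distance([4.0]), 3))

# Several pairs: the result is always shorter than the shortest distance in the sum.
pairs = [4.0, 5.0, 6.0, 8.0]
print(effective_distance(pairs) < min(pairs))

# 64 atom pairs all at 3 Å give 3 / 64^(1/6) = 1.5 Å.
print(round(effective_distance([3.0] * 64), 3))
```

Since a residue-residue restraint typically sums over many atom pairs, an upper limit of 2Å on the effective distance still corresponds to individual atoms sitting at normal contact distances.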

Restraints validation

If you manually modify the generated restraint file or create your own, you can quickly check that the format is valid using the following haddock3-restraints sub-command:

haddock3-restraints validate_tbl ambig-paratope-NMR-epitope.tbl --silent

No output means that your TBL file is valid.

Note that this only validates the syntax of the restraint file, but does not check if the selections defined in the restraints are actually existing in your input PDB files.
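Since the validation covers syntax only, a complementary check is to confirm that every residue named in the restraints exists in the input structures. The Python sketch below is one possible way to do this and is not a haddock3 feature. The function names are hypothetical, and it assumes selections of the form `resi N and segid X` (in either order) and fixed-column PDB records, falling back to the chain ID when the segID columns are empty.

```python
import re


def selections_in_tbl(tbl_text):
    """Collect (segid, residue number) pairs from the innermost selections of a TBL file."""
    found = set()
    for inner in re.findall(r"\(([^()]*)\)", tbl_text):
        resid = re.search(r"\bresid?\s+(-?\d+)", inner)
        segid = re.search(r"\bsegid\s+(\S+)", inner)
        if resid and segid:
            found.add((segid.group(1), int(resid.group(1))))
    return found


def residues_in_pdb(pdb_lines):
    """Collect (segid or chain ID, residue number) pairs from ATOM/HETATM records."""
    present = set()
    for line in pdb_lines:
        if line.startswith(("ATOM", "HETATM")):
            segid = line[72:76].strip() or line[21].strip()
            present.add((segid, int(line[22:26])))
    return present


def missing_selections(tbl_text, pdb_lines):
    """Return restraint selections that match no residue in the given PDB lines."""
    return sorted(selections_in_tbl(tbl_text) - residues_in_pdb(pdb_lines))
```

A typical use would be to read the restraint file and both input PDB files, concatenate the PDB lines, and inspect the returned list; an empty list means every selected residue was found.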

Additional restraints for multi-chain proteins

As an antibody consists of two separate chains, it is important to define a few distance restraints

to keep them together during the high temperature flexible refinement stage of HADDOCK; otherwise they might slightly drift apart.

This can easily be done using the haddock3-restraints restrain_bodies sub-command.

haddock3-restraints restrain_bodies 4G6K_clean.pdb > antibody-unambig.tbl

The resulting file contains two CA-CA distance restraints, each with the exact distance measured between a randomly picked pair of CA atoms:

assign (segid A and resi 110 and name CA) (segid A and resi 132 and name CA) 26.326 0.0 0.0

assign (segid A and resi 97 and name CA) (segid A and resi 204 and name CA) 19.352 0.0 0.0

This file is also provided in the restraints directory.

Setting up and running the docking with HADDOCK3

Now that we have all required files at hand (PDB and restraints files), it is time to set up our docking protocol. In this tutorial, considering we have rather good information about the paratope and epitope, we will execute a fast HADDOCK3 docking workflow, reducing the non-negligible computational cost of HADDOCK by decreasing the sampling, without impacting too much the accuracy of the resulting models.

HADDOCK3 workflow definition

The first step is to create a HADDOCK3 configuration file that will define the docking workflow. We will follow a classic HADDOCK workflow consisting of rigid body docking, semi-flexible refinement and final energy minimisation followed by clustering.

We will also integrate two analysis modules in our workflow:

- caprieval will be used at various stages to compare models to either the best scoring model (if no reference is given) or a reference structure, which in our case we have at hand (pdbs/4G6M_matched.pdb). This will directly allow us to assess the performance of the protocol. In the absence of a reference, caprieval is still useful to assess the convergence of a run and analyse the results.

- contactmap, added as the last module, will generate contact matrices of both intra- and intermolecular contacts and a chord chart of intermolecular contacts for each cluster.

Our workflow consists of the following modules:

- topoaa: Generates the topologies for the CNS engine and builds missing atoms

- rigidbody: Performs rigid body energy minimisation (it0 in haddock2.x)

- caprieval: Calculates CAPRI metrics (i-RMSD, l-RMSD, Fnat, DockQ) with respect to the top scoring model or reference structure if provided

- seletop: Selects the top X models from the previous module

- flexref: Performs semi-flexible refinement of the interface (it1 in haddock2.4)

- caprieval

- emref: Final refinement by energy minimisation (itw EM only in haddock2.4)

- caprieval

- clustfcc: Clustering of models based on the fraction of common contacts (FCC)

- seletopclusts: Selects the top models of all clusters

- caprieval

- contactmap: Contacts matrix and a chord chart of intermolecular contacts

The corresponding toml configuration file (provided in workflows/docking-antibody-antigen.cfg) looks like:

# ====================================================================

# Antibody-antigen docking example with restraints from the antibody

# paratope to the NMR-identified epitope on the antigen

# ====================================================================

# Directory in which the scoring will be done

run_dir = "run1"

# Compute mode

mode = "local"

ncores = 50

# Self contained rundir (to avoid problems with long filename paths)

self_contained = true

# Post-processing to generate statistics and plots

postprocess = true

clean = true

molecules = [

"pdbs/4G6K_clean.pdb",

"pdbs/4I1B_clean.pdb"

]

# ====================================================================

# Parameters for each stage are defined below, prefer full paths

# ====================================================================

[topoaa]

[rigidbody]

# CDR to NMR epitope ambig restraints

ambig_fname = "restraints/ambig-paratope-NMR-epitope.tbl"

# Restraints to keep the antibody chains together

unambig_fname = "restraints/antibody-unambig.tbl"

# Reduced sampling (100 instead of the default of 1000)

sampling = 100

[caprieval]

reference_fname = "pdbs/4G6M_matched.pdb"

[seletop]

# Selection of the top 50 best scoring complexes (instead of the default of 200)

select = 50

[flexref]

tolerance = 5

# CDR to NMR epitope ambig restraints

ambig_fname = "restraints/ambig-paratope-NMR-epitope.tbl"

# Restraints to keep the antibody chains together

unambig_fname = "restraints/antibody-unambig.tbl"

[caprieval]

reference_fname = "pdbs/4G6M_matched.pdb"

[emref]

tolerance = 5

# CDR to NMR epitope ambig restraints

ambig_fname = "restraints/ambig-paratope-NMR-epitope.tbl"

# Restraints to keep the antibody chains together

unambig_fname = "restraints/antibody-unambig.tbl"

[caprieval]

reference_fname = "pdbs/4G6M_matched.pdb"

[clustfcc]

plot_matrix = true

[seletopclusts]

# Selection of the top 4 best scoring complexes from each cluster

top_models = 4

[caprieval]

reference_fname = "pdbs/4G6M_matched.pdb"

[contactmap]

# ====================================================================

In this case, since we have information for both interfaces, we use a low-sampling configuration file, which takes only a small amount of computational resources to run.

The initial sampling parameter of the rigid-body energy minimisation (rigidbody) module is set to 100 models, of which only the best ones are passed to the flexible refinement (flexref) module by the seletop module (select = 50 in the configuration shown above; the precalculated run analysed below was generated with 40).

The subsequent flexible refinement (flexref) and energy minimisation (emref) modules will use all models passed by the seletop module.

FCC clustering (clustfcc) is then applied to group together models sharing a consistent fraction of the interface contacts.

The top 4 models of each cluster are saved to disk (seletopclusts).

Multiple caprieval modules are executed at different stages of the workflow to check how the quality (and rankings) of the models change throughout the protocol.

In this case we are providing the known crystal structure of the complex as reference.

Note: For making best use of the available CPU resources it is recommended to adapt the sampling parameter to be a multiple of the number of available cores when running in local mode. For this reason, for the ASM HPC/AI school the sampling is set to be a multiple of 48.
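As a small illustration of this recommendation, the sketch below computes the closest multiples of the core count below and above a desired sampling value. The function name is hypothetical and the numbers are only examples.

```python
def sampling_multiples(sampling, ncores):
    """Return the closest multiples of ncores below and above the requested sampling."""
    lower = (sampling // ncores) * ncores
    upper = -(-sampling // ncores) * ncores  # ceiling division
    return lower, upper


for sampling, ncores in [(100, 50), (100, 48), (1000, 48)]:
    lower, upper = sampling_multiples(sampling, ncores)
    print(f"sampling={sampling:5d} ncores={ncores:3d} -> {lower} or {upper}")
```

For 100 models on 48 cores this suggests either 96 or 144 models, so that no core sits idle while the last models are being calculated.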

Note: In case no reference is available (the usual scenario), the best ranked model is used as reference for each stage.

Including caprieval at the various stages even when no reference is provided is useful to get the rankings and scores and visualise the results (see Analysis section below).

Note: The default sampling would be 1000 models for rigidbody of which 200 are passed to the flexible refinement in seletop.

As an indication of the computational requirements, the default sampling workflow for this tutorial completes in about 37 min using 12 cores on an Apple M2 processor (macOS).

In comparison, the reduced sampling run (100/40) takes about 7-8min.

Note: To get a list of all possible parameters that can be defined in a specific module (and their default values) you can use the following command:

Add the -d option to get a more detailed description of parameters and use the -h option to see a list of arguments and options.

Alternatively, you can consult the developer’s guide, where each parameter of each module is listed along with its default value, short and long descriptions, etc. Navigate to the Modules section and select the module whose parameters you want to display.

Note that, in contrast to HADDOCK2.X, we have much more flexibility in defining our workflow. As an example, we could use this flexibility by introducing a clustering step after the initial rigid-body docking stage, selecting a given number of models per cluster and refining all of those. For an example of this strategy see BONUS 4 about ensemble docking.

Running HADDOCK3

In the first section of the workflow above we have a parameter mode defining the execution mode. HADDOCK3 currently supports three different execution modes:

- local: In this mode, HADDOCK3 will run on the current system, using the number of cores defined in the config file (ncores), up to a maximum of the total number of available cores on the system.

- batch: In this mode, HADDOCK3 will typically be started on your local server (e.g. the login node) and will dispatch jobs to the batch system of your cluster (slurm and torque are currently supported).

- mpi: HADDOCK3 also supports a pseudo-parallel MPI implementation, which allows you to harness the power of multiple nodes to distribute the computations (functional but still very experimental at this stage).

Execution of HADDOCK3 on the computers of the BioExcel 2025 summerschool

To execute the HADDOCK3 workflow on the computational resources provided for this workshop, we will simply run in local mode, calling haddock3 with the workflow you want to execute as argument.

haddock3 <my-workflow-configuration-file>

Alternatively, redirect the output to a log file and send haddock3 to the background:

haddock3 <my-workflow-configuration-file> > haddock3.log &

As an indication, running locally on an Apple M2 laptop using 10 cores, this workflow completed in 7 minutes.

Execution of HADDOCK3 on DISCOVERER (BioExcel Sofia May 2025 workshop)


To execute the HADDOCK3 workflow on the computational resources provided for this workshop, you should create an execution script containing specific requirements for the queueing system and the HADDOCK3 configuration and execution. An example slurm script, run-haddock3-discoverer.sh, is provided with the data you unzipped:

#!/bin/bash

#SBATCH --nodes=1

#SBATCH --account=school-01

#SBATCH --qos=school-01

#SBATCH --job-name "haddock3"

#SBATCH --tasks-per-node=50

#SBATCH --mem-per-cpu 1500

#SBATCH --time 04:00:00

module load python/3/3.12

module load haddock3/2025.5.0

haddock3 workflows/docking-antibody-antigen.cfg

Execution of HADDOCK3 on the TRUBA resources (EuroCC Istanbul April 2024 workshop)


To execute the HADDOCK3 workflow on the computational resources provided for this workshop, you should create an execution script containing specific requirements for the queueing system and the HADDOCK3 configuration and execution. Two scripts are provided with the data you unzipped, one for execution on the hamsi cluster and one for the barbun cluster:

run-haddock3-barbun.sh

run-haddock3-hamsri.sh

For example, run-haddock3-hamsri.sh contains:

#!/bin/bash

#SBATCH --nodes=1

#SBATCH --tasks-per-node=54

#SBATCH -C weka

#SBATCH -p hamsi

#SBATCH --time 00:30:00

source ~egitim/HADDOCK/haddock3/.venv/bin/activate

haddock3 workflows/docking-antibody-antigen.cfg

The script is then submitted to the batch system with:

sbatch run-haddock3-hamsri.sh

Execution of HADDOCK3 on Fugaku (ASM 2026 HPC/AI school, Kobe Japan)


To execute the workflow on Fugaku, you can either start an interactive session or create a job file that will execute HADDOCK3 on a node, with HADDOCK3 running in local mode (the setup in the above configuration file with mode="local") and harvesting all cores of that node (ncores=48).

Interactive session on a node:

pjsub -x PJM_LLIO_GFSCACHE=/vol0003:/vol0004 -g "hp250477" --interact -L "node=1" -L "rscgrp=int" -L "elapse=2:00:00" --sparam "wait-time=600"

source /vol0300/data/hp250477/Materials/Life_Science/20250202_Bonvin/haddock3/.venv/bin/activate

haddock3 ./workflows/docking-antibody-antigen.cfg

Job submission to the batch system:

For this execution mode you should create an execution script containing specific requirements for the queueing system and the HADDOCK3 configuration and execution. Here is an example of such an execution script (also provided in the HADDOCK3-antibody-antigen directory as run-haddock3-fugaku.sh):

#!/bin/sh -x

#PJM -L "node=1" # Assign 1 node

#PJM -L "rscgrp=small" # Specify resource group

#PJM -L "elapse=02:00:00" # Elapsed time limit 2 hours

#PJM -g hp250477 # group name

#PJM -x PJM_LLIO_GFSCACHE=/vol0003:/vol0004 # volume names that job uses

#PJM -s # Statistical information output

source /vol0300/data/hp250477/Materials/Life_Science/20260202-HADDOCK/haddock3/.venv/bin/activate

haddock3 ./workflows/docking-antibody-antigen.cfg

This file should be submitted to the batch system using the pjsub command:

pjsub run-haddock3-fugaku.sh

And you can check the status in the queue using pjstat. This run should take about 25 minutes to complete on a single node using 48 ARM cores.

Execution of HADDOCK3 on ADD Ljubljana (BioExcel Adriatic edition 2026, Ljubljana, Slovenia)

To execute the workflow, you can either start an interactive session or create a job file that will execute HADDOCK3 on a node,

with HADDOCK3 running in local mode (the setup in the above configuration file with mode="local") and harvesting all cores of that node (ncores=16).

Start an interactive session on a node:

salloc --job-name=interactive_haddock3 --partition=amd --nodes=1 --cpus-per-task=16 --time-min=120

Once the session is active, activate HADDOCK3 with:

source /home/vreys/haddock3/.haddock3-env/bin/activate

You can then follow the tutorial and run all the commands present in it, such as starting a haddock3 docking workflow with:

haddock3 ./workflows/docking-antibody-antigen.cfg

Job submission to the batch system:

For this execution mode you should create an execution script containing specific requirements for the queueing system and the HADDOCK3 configuration and execution.

Here is an example of such an execution script (that can be saved under the name run-haddock3-slurm.sh):

#!/bin/bash

#SBATCH --partition=amd

#SBATCH --job-name=haddock3_run

#SBATCH --nodes=1

#SBATCH --cpus-per-task=16

#SBATCH --time-min=120

#SBATCH --output="haddock3_run_log.txt"

# Source the environment

source /home/vreys/haddock3/.haddock3-env/bin/activate

# Go to the appropriate directory

cd ~/HADDOCK3-antibody-antigen

# Launch haddock3

haddock3 workflows/docking-antibody-antigen.cfg

This file should be submitted to the batch system using the sbatch command:

sbatch run-haddock3-slurm.sh

And you can check the status in the queue using squeue -u Username.

Also, you can follow the state of your run by looking at the content of either the log file or the slurm output using:

tail -f haddock3_run_log.txt

This run should take around 20 minutes to complete on a single node using 16 cores.

Learn more about the various execution modes of haddock3

Local execution

In this mode HADDOCK3 will run on the current system, using the defined number of cores (ncores) in the config file to a maximum of the total number of available cores on the system minus one. An example of the relevant parameters to be defined in the first section of the config file is:

# compute mode

mode = "local"

# 1 node x 50 cores

ncores = 50

In this mode HADDOCK3 can be started from the command line, with the workflow configuration file as argument:

haddock3 <my-workflow-configuration-file>

Alternatively, redirect the output to a log file and send haddock3 to the background:

haddock3 <my-workflow-configuration-file> > haddock3.log &

Execution in batch mode using slurm

Here is an example script for submitting via the slurm batch system:

#!/bin/bash

#SBATCH --nodes=1

#SBATCH --tasks-per-node=50

#SBATCH -J haddock3

#SBATCH --partition=medium

# activate the haddock3 conda environment

source $HOME/miniconda3/etc/profile.d/conda.sh

conda activate haddock3

# go to the run directory

cd $HOME/HADDOCK3-antibody-antigen

# execute

haddock3 <my-workflow-configuration-file>

In batch mode, HADDOCK3 will typically be started on your local server (e.g. the login node) and will dispatch jobs to the batch system of your cluster. Two batch systems are currently supported: slurm and torque (defined by the batch_type parameter). In the configuration file you will have to define the queue name and the maximum number of concurrent jobs sent to the queue (queue_limit). Since HADDOCK3 single model calculations are quite fast, it is recommended to calculate multiple models within one job submitted to the batch system. The number of models per job is defined by the concat parameter in the configuration file. You want to avoid sending thousands of very short jobs to the batch system if you want to remain friends with your system administrators. An example of the relevant parameters to be defined in the first section of the config file is:

# compute mode

mode = "batch"

# batch system

batch_type = "slurm"

# queue name

queue = "short"

# number of concurrent jobs to submit to the batch system

queue_limit = 100

# number of models to produce per submitted job

concat = 10
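To get a feeling for how concat and queue_limit interact, the following Python sketch estimates how many jobs a given sampling would generate. It is a simplification that assumes each job computes exactly concat models and that jobs are processed in rounds of at most queue_limit concurrent jobs; the function name is hypothetical and this is not how HADDOCK3 schedules jobs internally.

```python
def batch_plan(n_models, concat, queue_limit):
    """Estimate the number of submitted jobs and of rounds of concurrent jobs."""
    n_jobs = -(-n_models // concat)        # ceiling division
    n_rounds = -(-n_jobs // queue_limit)   # ceiling division
    return n_jobs, n_rounds


# Default rigid-body sampling (1000 models) with the settings shown above
print(batch_plan(1000, concat=10, queue_limit=100))

# Same sampling with one model per job: ten times more, very short jobs
print(batch_plan(1000, concat=1, queue_limit=100))
```

With concat = 10, the 1000 models fit into 100 jobs that can all be queued at once, whereas one model per job would mean 1000 separate submissions.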

Execution in MPI mode

HADDOCK3 supports a parallel pseudo-MPI implementation. For this to work, the mpi4py library must have been installed at installation time. Refer to the MPI-related instructions. The execution mode should be set to `mpi` and the total number of cores should match the requested resources when submitting to the batch system. An example of the relevant parameters to be defined in the first section of the config file is:

# compute mode

mode = "mpi"

# 5 nodes x 50 tasks = ncores = 250

ncores = 250

The corresponding resources should then be requested when submitting to the batch system, for example with the following slurm script:

#!/bin/bash

#SBATCH --nodes=5

#SBATCH --tasks-per-node=50

#SBATCH -J haddock3mpi

# Make sure haddock3 is activated

source $HOME/miniconda3/etc/profile.d/conda.sh

conda activate haddock3

# go to the run directory

# edit if needed to specify the correct location

cd $HOME/HADDOCK3-antibody-antigen

# execute

haddock3 <my-workflow-configuration-file>

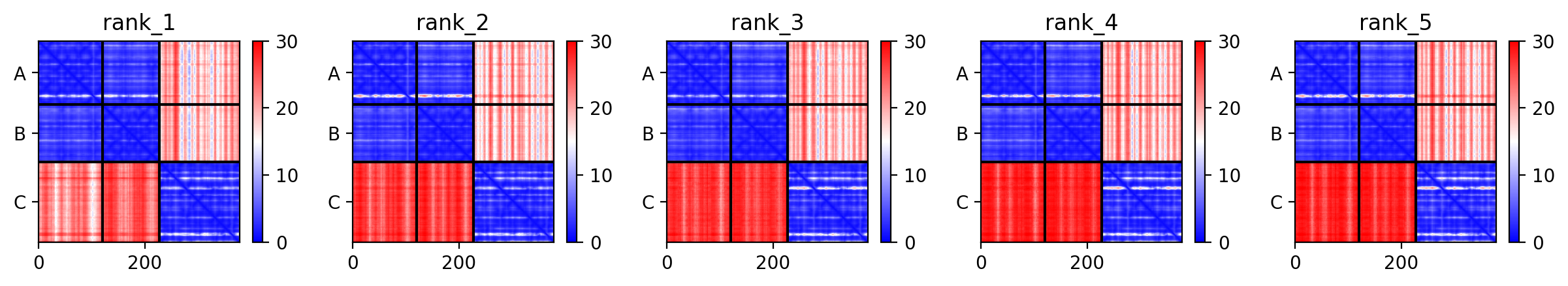

Analysis of docking results

In case something went wrong with the docking (or simply if you do not want to wait for the results) you can find the following precalculated runs in the runs directory:

- run1: docking run created using the unbound antibody.

- run1-af2: docking run created using the AlphaFold-Multimer antibody (see BONUS 3).

- run1-abb: docking run created using the ImmuneBuilder antibody (see BONUS 3).

- run1-ens: docking run created using an ensemble of antibody models (see BONUS 4).

- run-scoring: scoring run created using various models obtained at the previous stages (see BONUS 6).

Once your run has completed - inspect the content of the resulting directory. You will find the various steps (modules) of the defined workflow numbered sequentially starting at 0, e.g.:

> ls run1/

00_topoaa/

01_rigidbody/

02_caprieval/

03_seletop/

04_flexref/

05_caprieval/

06_emref/

07_caprieval/

08_clustfcc/

09_seletopclusts/

10_caprieval/

11_contactmap/

analysis/

data/

log

toppar/

traceback/

In addition to the module directories, there is a log file (text file) and four additional directories:

- the analysis directory contains various plots to visualize the results for each caprieval step and a general report (report.html) that provides all statistics with various plots. You can open this file in your preferred web browser

- the data directory contains the input data (PDB and restraint files) for the various modules, as well as an input workflow (in the configurations directory)

- the toppar directory contains the force field topology and parameter files (only present when running in self-contained mode)

- the traceback directory contains traceback.tsv, which links all models across the workflow, showing which model originates from which in the previous steps.

You can find information about the duration of the run at the bottom of the log file.

Each sampling/refinement/selection module will contain PDB files.

For example, the 09_seletopclusts directory contains the selected models from each cluster. The clusters in that directory are numbered based

on their rank, i.e. cluster_1 refers to the top-ranked cluster. Information about the origin of these files can be found in that directory in the seletopclusts.txt file.

The simplest way to extract ranking information and the corresponding HADDOCK scores is to look at the XX_caprieval directories (which is why it is a good idea to have it as the final module, and possibly as intermediate steps). This directory will always contain a capri_ss.tsv single model statistics file, which contains the model names, rankings and statistics (score, iRMSD, Fnat, lRMSD, ilRMSD and dockq score). E.g. for 10_caprieval:

model md5 caprieval_rank score irmsd fnat lrmsd ilrmsd dockq rmsd cluster_id cluster_ranking model-cluster_ranking air angles bonds bsa cdih coup dani desolv dihe elec improper rdcs rg sym total vdw vean xpcs

../09_seletopclusts/cluster_1_model_1.pdb - 1 -140.319 0.908 0.897 2.205 1.451 0.855 1.016 3 1 1 133.760 0.000 0.000 2010.880 0.000 0.000 0.000 7.010 0.000 -605.174 0.000 0.000 0.000 0.000 -511.084 -39.671 0.000 0.000

../09_seletopclusts/cluster_1_model_2.pdb - 2 -137.507 0.879 0.948 1.951 1.354 0.881 0.989 3 1 2 189.059 0.000 0.000 1913.390 0.000 0.000 0.000 3.243 0.000 -521.143 0.000 0.000 0.000 0.000 -387.512 -55.428 0.000 0.000

../09_seletopclusts/cluster_1_model_3.pdb - 3 -126.481 1.052 0.914 3.038 1.958 0.824 1.293 3 1 3 127.044 0.000 0.000 1816.780 0.000 0.000 0.000 -2.884 0.000 -426.677 0.000 0.000 0.000 0.000 -350.599 -50.966 0.000 0.000

../09_seletopclusts/cluster_1_model_4.pdb - 4 -102.227 1.334 0.793 2.331 2.292 0.760 1.341 3 1 4 128.628 0.000 0.000 1837.970 0.000 0.000 0.000 12.344 0.000 -410.669 0.000 0.000 0.000 0.000 -327.341 -45.299 0.000 0.000

../09_seletopclusts/cluster_2_model_1.pdb - 5 -102.077 14.789 0.103 23.359 22.787 0.077 14.405 2 2 1 163.844 0.000 0.000 1888.310 0.000 0.000 0.000 2.575 0.000 -348.025 0.000 0.000 0.000 0.000 -235.613 -51.431 0.000 0.000

...

If clustering was performed prior to calling the caprieval module, the capri_ss.tsv file will also contain information about the cluster to which each model belongs and its ranking within the cluster.
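These tab-separated files are easy to process with a few lines of Python. The sketch below assumes the column names shown in the excerpt above and uses a shortened in-memory copy of that excerpt (only a few columns and rows) rather than a real file; the helper name and the skipping of comment lines are defensive assumptions, not part of HADDOCK3.

```python
import csv
import io


def read_capri_table(handle):
    """Read a tab-separated caprieval table into a list of dictionaries."""
    # Skip comment lines, should the file contain any (defensive assumption)
    lines = (line for line in handle if not line.startswith("#"))
    return list(csv.DictReader(lines, delimiter="\t"))


# Shortened copy of the capri_ss.tsv excerpt shown above
sample = "\n".join([
    "\t".join(["model", "caprieval_rank", "score", "irmsd", "fnat", "lrmsd", "dockq"]),
    "\t".join(["../09_seletopclusts/cluster_1_model_1.pdb", "1", "-140.319", "0.908", "0.897", "2.205", "0.855"]),
    "\t".join(["../09_seletopclusts/cluster_1_model_2.pdb", "2", "-137.507", "0.879", "0.948", "1.951", "0.881"]),
    "\t".join(["../09_seletopclusts/cluster_1_model_3.pdb", "3", "-126.481", "1.052", "0.914", "3.038", "0.824"]),
    "\t".join(["../09_seletopclusts/cluster_2_model_1.pdb", "5", "-102.077", "14.789", "0.103", "23.359", "0.077"]),
])

rows = read_capri_table(io.StringIO(sample))

# Best scoring model (lowest HADDOCK score) versus best model by DockQ
best_score = min(rows, key=lambda row: float(row["score"]))
best_dockq = max(rows, key=lambda row: float(row["dockq"]))
print("Top ranked by score:", best_score["model"], best_score["score"])
print("Best DockQ         :", best_dockq["model"], best_dockq["dockq"])
```

To read an actual file, open it and pass the file handle instead, e.g. `with open("run1/10_caprieval/capri_ss.tsv") as handle: rows = read_capri_table(handle)`.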

The relevant statistics are:

- score: the HADDOCK score (arbitrary units)

- irmsd: the interface RMSD, calculated over the interfaces of the molecules

- fnat: the fraction of native contacts

- lrmsd: the ligand RMSD, calculated on the ligand after fitting on the receptor (1st component)

- ilrmsd: the interface-ligand RMSD, calculated over the interface of the ligand after fitting on the interface of the receptor (more relevant for small ligands for example)

- dockq: the DockQ score, which is a combination of irmsd, lrmsd and fnat and provides a continuous scale between 1 (exactly equal to reference) and 0

Various other terms are also reported including:

- bsa: the buried surface area (in squared angstroms)

- elec: the intermolecular electrostatic energy

- vdw: the intermolecular van der Waals energy

- desolv: the desolvation energy

The iRMSD, lRMSD and Fnat metrics are the ones used in the blind protein-protein prediction experiment CAPRI (Critical PRediction of Interactions).

In CAPRI the quality of a model is defined as (for protein-protein complexes):

- acceptable model: i-RMSD < 4Å or l-RMSD < 10Å and Fnat > 0.1 (0.23 < DOCKQ < 0.49)

- medium quality model: i-RMSD < 2Å or l-RMSD < 5Å and Fnat > 0.3 (0.49 < DOCKQ < 0.8)

- high quality model: i-RMSD < 1Å or l-RMSD < 1Å and Fnat > 0.5 (DOCKQ > 0.8)
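The criteria listed above can be turned into a small classification function. The sketch below reads each criterion as "(i-RMSD or l-RMSD below its threshold) and Fnat above its threshold", which is an assumption about how the conditions are grouped, and it is not the implementation used by caprieval. The example values are taken from the capri_ss.tsv excerpt shown earlier.

```python
def capri_quality(irmsd, lrmsd, fnat):
    """Classify a model with the thresholds listed above.

    Assumption: each criterion is read as (i-RMSD or l-RMSD) and Fnat.
    """
    if fnat > 0.5 and (irmsd < 1.0 or lrmsd < 1.0):
        return "high"
    if fnat > 0.3 and (irmsd < 2.0 or lrmsd < 5.0):
        return "medium"
    if fnat > 0.1 and (irmsd < 4.0 or lrmsd < 10.0):
        return "acceptable"
    return "incorrect"


# (i-RMSD, l-RMSD, Fnat) values from the capri_ss.tsv excerpt
models = {
    "cluster_1_model_1": (0.908, 2.205, 0.897),
    "cluster_1_model_3": (1.052, 3.038, 0.914),
    "cluster_2_model_1": (14.789, 23.359, 0.103),
}

for name, (irmsd, lrmsd, fnat) in models.items():
    print(f"{name}: {capri_quality(irmsd, lrmsd, fnat)}")
```

The checks run from the strictest to the most permissive class, so each model is assigned the best category it qualifies for.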

In case the caprieval module is called after a clustering step, an additional capri_clt.tsv file will be present in the directory.

This file contains the cluster ranking and score statistics, averaged over the minimum number of models defined for clustering

(4 by default), with their corresponding standard deviations. E.g.:

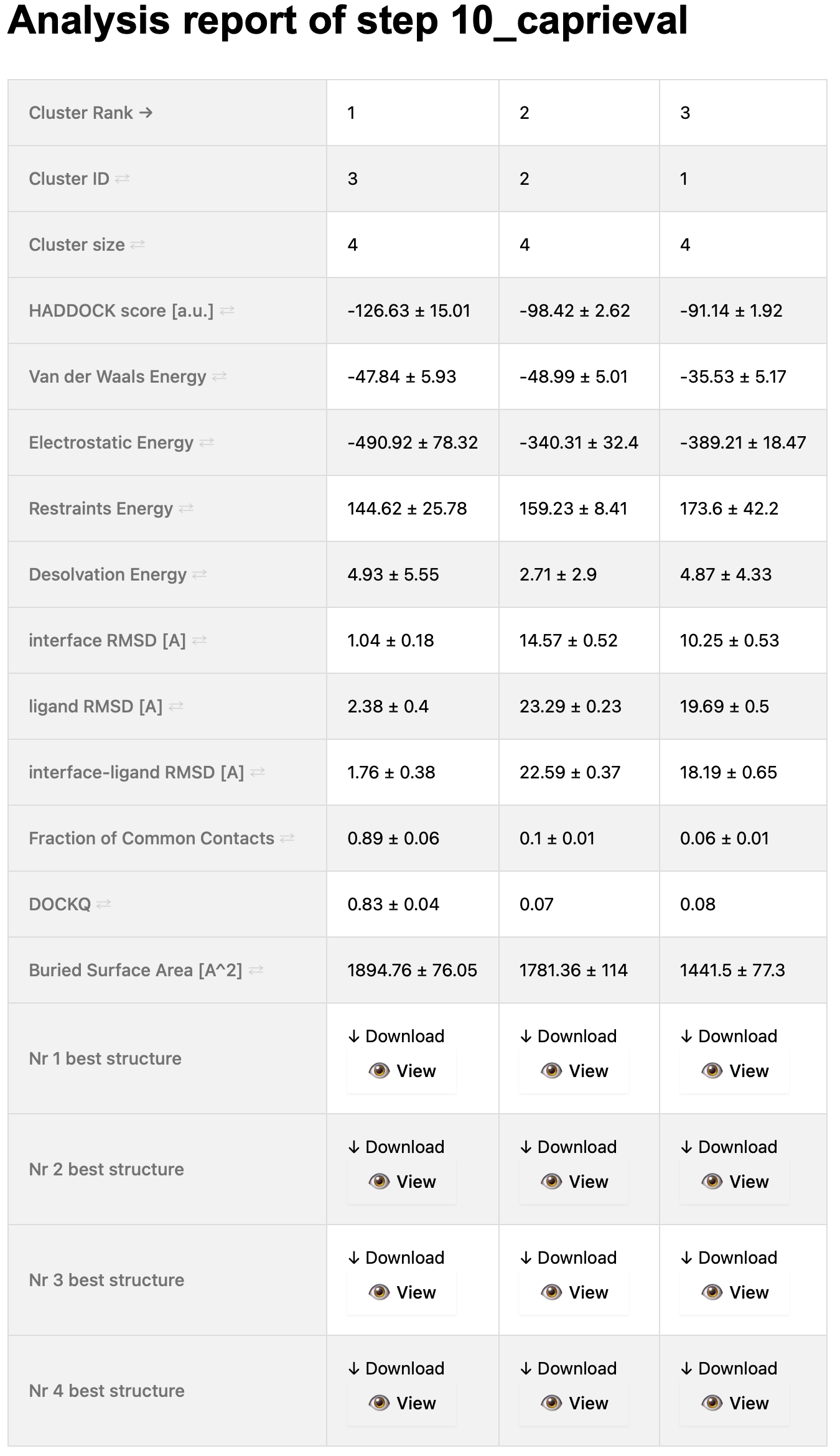

cluster_rank cluster_id n under_eval score score_std irmsd irmsd_std fnat fnat_std lrmsd lrmsd_std dockq dockq_std ilrmsd ilrmsd_std rmsd rmsd_std air air_std bsa bsa_std desolv desolv_std elec elec_std total total_std vdw vdw_std caprieval_rank

1 3 4 - -126.634 15.010 1.044 0.180 0.888 0.058 2.381 0.403 0.830 0.045 1.764 0.382 1.160 0.159 144.623 25.775 1894.755 76.054 4.928 5.550 -490.916 78.318 -394.134 70.848 -47.841 5.927 1

2 2 4 - -98.425 2.624 14.572 0.524 0.095 0.009 23.293 0.233 0.074 0.002 22.593 0.371 14.300 0.194 159.227 8.415 1781.358 114.002 2.706 2.898 -340.312 32.395 -230.077 26.771 -48.992 5.015 2

3 1 4 - -91.137 1.918 10.249 0.530 0.056 0.007 19.692 0.505 0.078 0.005 18.190 0.649 10.554 0.495 173.598 42.201 1441.505 77.296 4.873 4.329 -389.212 18.467 -251.141 40.747 -35.527 5.170 3

...

In this file you find the cluster rank (which corresponds to the naming of the clusters in the preceding seletopclusts directory), the cluster ID (which is related to the size of the cluster, 1 being always the largest cluster), the number of models (n) in the cluster and the corresponding statistics (averages + standard deviations). The corresponding cluster PDB files can be found in the preceding 09_seletopclusts directory.
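As a quick sanity check of how the cluster values relate to the single-model values, the sketch below averages the HADDOCK scores of the four cluster_1 models listed in the capri_ss.tsv excerpt. Using the population standard deviation is an observation that appears to match the numbers shown here, not a statement about how caprieval is implemented.

```python
import statistics

# HADDOCK scores of the four cluster_1 models from the capri_ss.tsv excerpt
cluster_1_scores = [-140.319, -137.507, -126.481, -102.227]

mean_score = statistics.mean(cluster_1_scores)
std_score = statistics.pstdev(cluster_1_scores)  # population standard deviation

print(f"cluster score: {mean_score:.3f} +/- {std_score:.3f}")
```

The result is very close to the score and score_std reported for the top-ranked cluster in the capri_clt.tsv excerpt (-126.634 and 15.010).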

While these simple text files can be easily checked from the command line already, they might be cumbersome to read.

For that reason, we have developed a post-processing analysis that automatically generates html reports for all caprieval steps in the workflow.

These are located in the respective analysis/XX_caprieval directories and can be viewed using your favorite web browser.

Cluster statistics

Let us now analyse the docking results. Use for that either your own run or a pre-calculated run provided in the runs directory.

Go into the analysis/10_caprieval_analysis directory of the respective run directory (if needed copy the run or that directory to your local computer) and open in a web browser the report.html file. Be patient as this page contains interactive plots that may take some time to generate.

On the top of the page, you will see a table that summarises the cluster statistics (taken from the capri_clt.tsv file).

The columns (corresponding to the various clusters) are sorted by default on the cluster rank, which is based on the HADDOCK score (found on the 4th row of the table).

As this is an interactive table, you can sort it as you wish by using the arrows present in the first column.

Simply click on the arrows of the term you want to use to sort the table (and you can sort it in ascending or descending order).

A snapshot of this table is shown below:

You can also view this report online here

Note that in case the PDB files are still compressed (gzipped) the download links will not work, and online visualisation is not enabled. To avoid this disk-space-saving compression, consider setting the global parameter clean = false at the beginning of your configuration file.

Inspect the final cluster statistics

How many clusters have been generated?

Since for this tutorial we have at hand the crystal structure of the complex, we provided it as reference to the caprieval modules.

This means that the iRMSD, lRMSD, Fnat and DockQ statistics report on the quality of the docked model compared to the reference crystal structure.

How many clusters of acceptable or better quality have been generated according to CAPRI criteria?

What is the rank of the best cluster generated?

What is the rank of the first acceptable or better cluster generated?

Visualizing the scores and their components

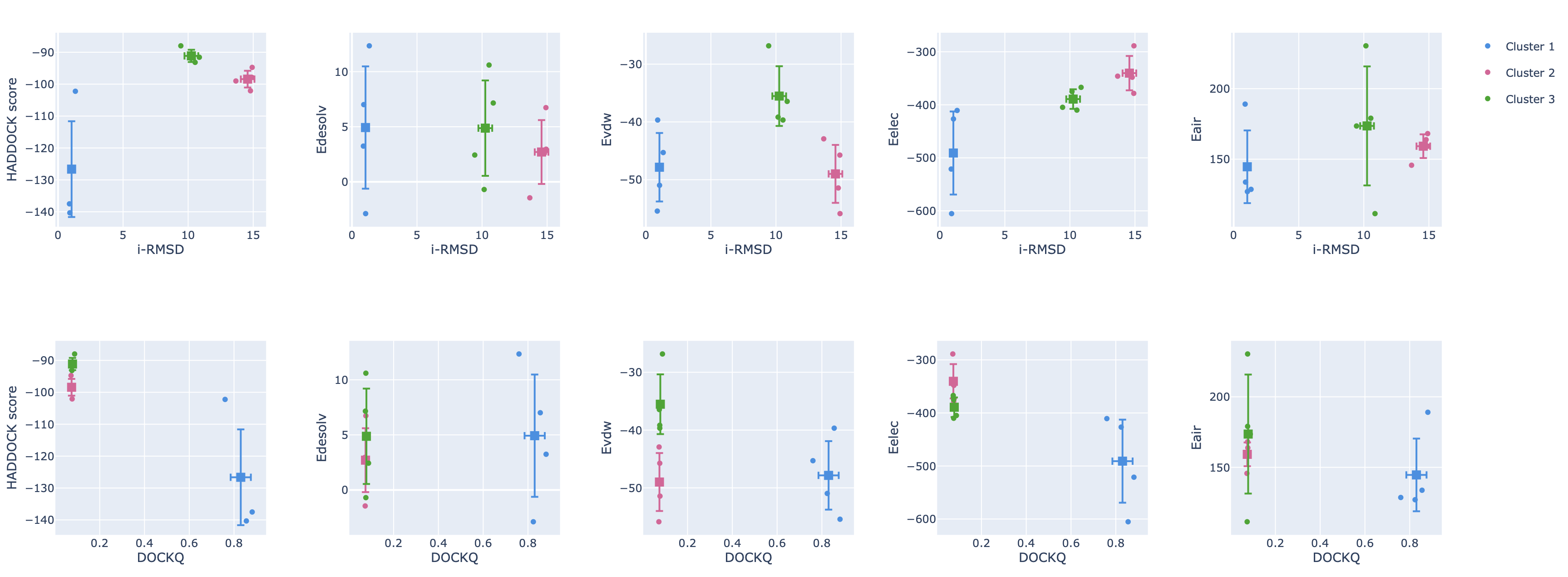

Next to the cluster statistic table shown above, the report.html file also contains a variety of plots displaying the HADDOCK score

and its components against various CAPRI metrics (i-RMSD, l-RMSD, Fnat, DockQ) with a color-coded representation of the clusters.

These are interactive plots. A menu on the top right of the first row (you might have to scroll to the right to see it)

allows you to zoom in and out in the plots and turn on and off clusters.

As a reminder, you can also view this report online here

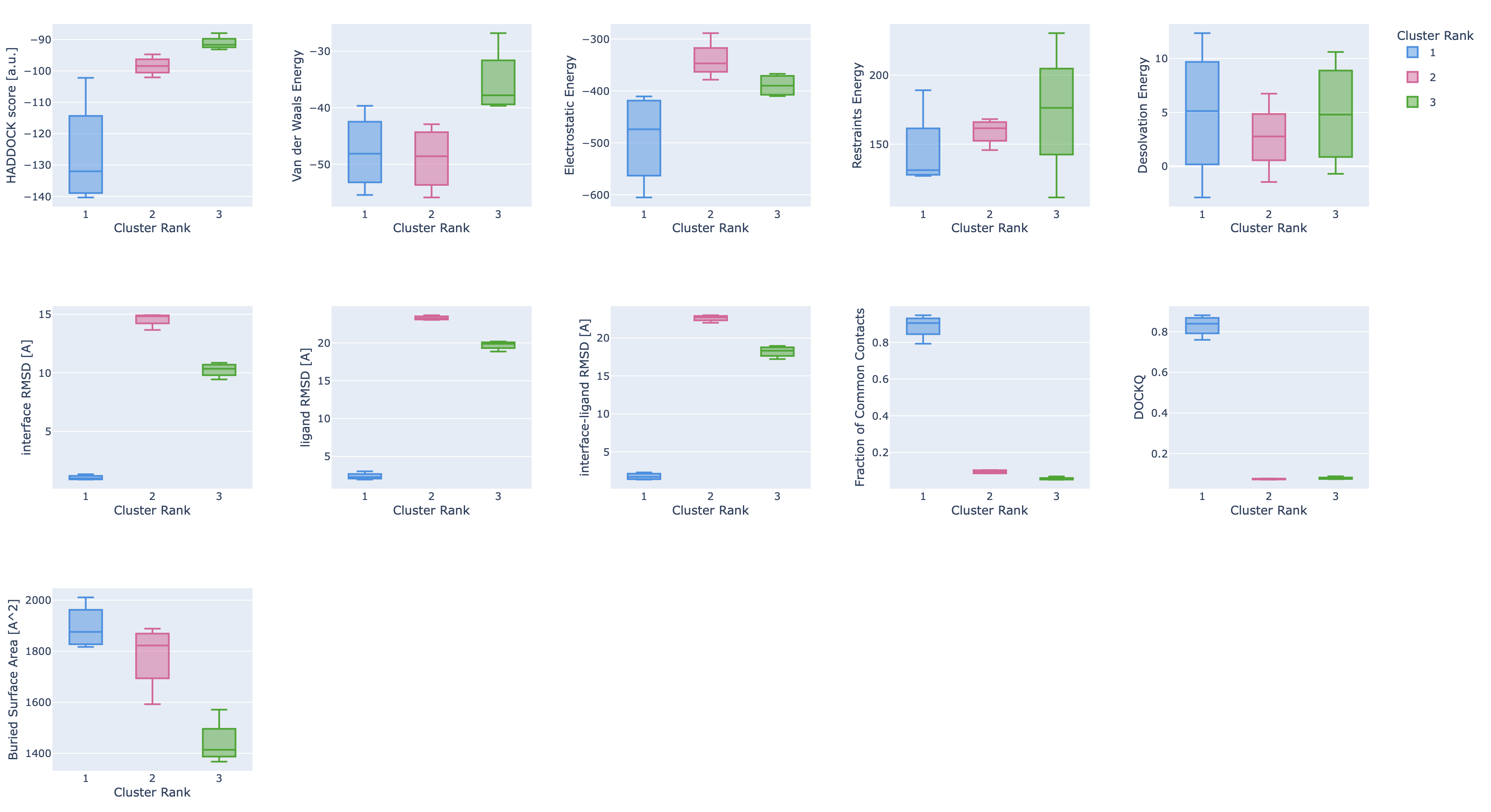

Finally, the report also shows plots of the cluster statistics (distributions of values per cluster ordered according to their HADDOCK rank):

Some single structure analysis

Going back to command line analysis, we are providing in the scripts directory a simple script that extracts some model statistics for acceptable or better models from the caprieval steps.

To use it, simply call the script with the run directory you want to analyze as argument, e.g.:

./scripts/extract-capri-stats.sh ./runs/run1

Output of the script:

==============================================

== runs/run1/02_caprieval/capri_ss.tsv

==============================================

Total number of acceptable or better models: 25 out of 100

Total number of medium or better models: 15 out of 100

Total number of high quality models: 1 out of 100

First acceptable model - rank: 1 i-RMSD: 1.196 Fnat: 0.672 DockQ: 0.741

First medium model - rank: 1 i-RMSD: 1.196 Fnat: 0.672 DockQ: 0.741

Best model - rank: 17 i-RMSD: 0.982 Fnat: 0.759 DockQ: 0.774

==============================================

== runs/run1/05_caprieval/capri_ss.tsv

==============================================

Total number of acceptable or better models: 14 out of 40

Total number of medium or better models: 14 out of 40

Total number of high quality models: 5 out of 40

First acceptable model - rank: 1 i-RMSD: 0.992 Fnat: 0.897 DockQ: 0.834

First medium model - rank: 1 i-RMSD: 0.992 Fnat: 0.897 DockQ: 0.834

Best model - rank: 11 i-RMSD: 0.789 Fnat: 0.776 DockQ: 0.842

==============================================

== runs/run1/07_caprieval/capri_ss.tsv

==============================================

Total number of acceptable or better models: 14 out of 40

Total number of medium or better models: 14 out of 40

Total number of high quality models: 3 out of 40

First acceptable model - rank: 1 i-RMSD: 1.037 Fnat: 0.931 DockQ: 0.841

First medium model - rank: 1 i-RMSD: 1.037 Fnat: 0.931 DockQ: 0.841

Best model - rank: 11 i-RMSD: 0.841 Fnat: 0.897 DockQ: 0.875

==============================================

== runs/run1/10_caprieval/capri_ss.tsv

==============================================

Total number of acceptable or better models: 4 out of 12

Total number of medium or better models: 4 out of 12

Total number of high quality models: 1 out of 12

First acceptable model - rank: 1 i-RMSD: 1.037 Fnat: 0.931 DockQ: 0.841

First medium model - rank: 1 i-RMSD: 1.037 Fnat: 0.931 DockQ: 0.841

Best model - rank: 3 i-RMSD: 0.908 Fnat: 0.897 DockQ: 0.855

Note that this kind of analysis only makes sense when we know the reference complex and for benchmarking / performance analysis purposes.

Look at the single structure statistics provided by the script

Answer:

In terms of iRMSD values, we only observe very small differences in the best model. The fraction of native contacts and the DockQ scores, however, improve much more after flexible refinement and increase again slightly after the final minimisation. All this will of course depend on how different the bound and unbound conformations are and on the amount of data used to drive the docking process. In general, from our experience, the more and better data at hand, the larger the conformational changes that can be induced.

Is the best model always ranked first?

Answer:

This is not the case. The scoring function is not perfect, but does a reasonable job at ranking models of acceptable or better quality on top in this case.

Note: A similar script to extract cluster statistics is available in the scripts directory as extract-capri-stats-clt.sh.

Contacts analysis

We have recently added a new contact analysis module to HADDOCK3 that generates for each cluster both a contact matrix of the entire system showing all contacts within a 4.5Å cutoff and a chord chart representation of intermolecular contacts.

In the current workflow we run, those files can be found in the 11_contactmap directory.

These are again html files with interactive plots (hover with your mouse over the plots).

This file taken from the pre-computed run can also directly be visualized here

Can you identify which residue(s) make(s) the most intermolecular contacts?

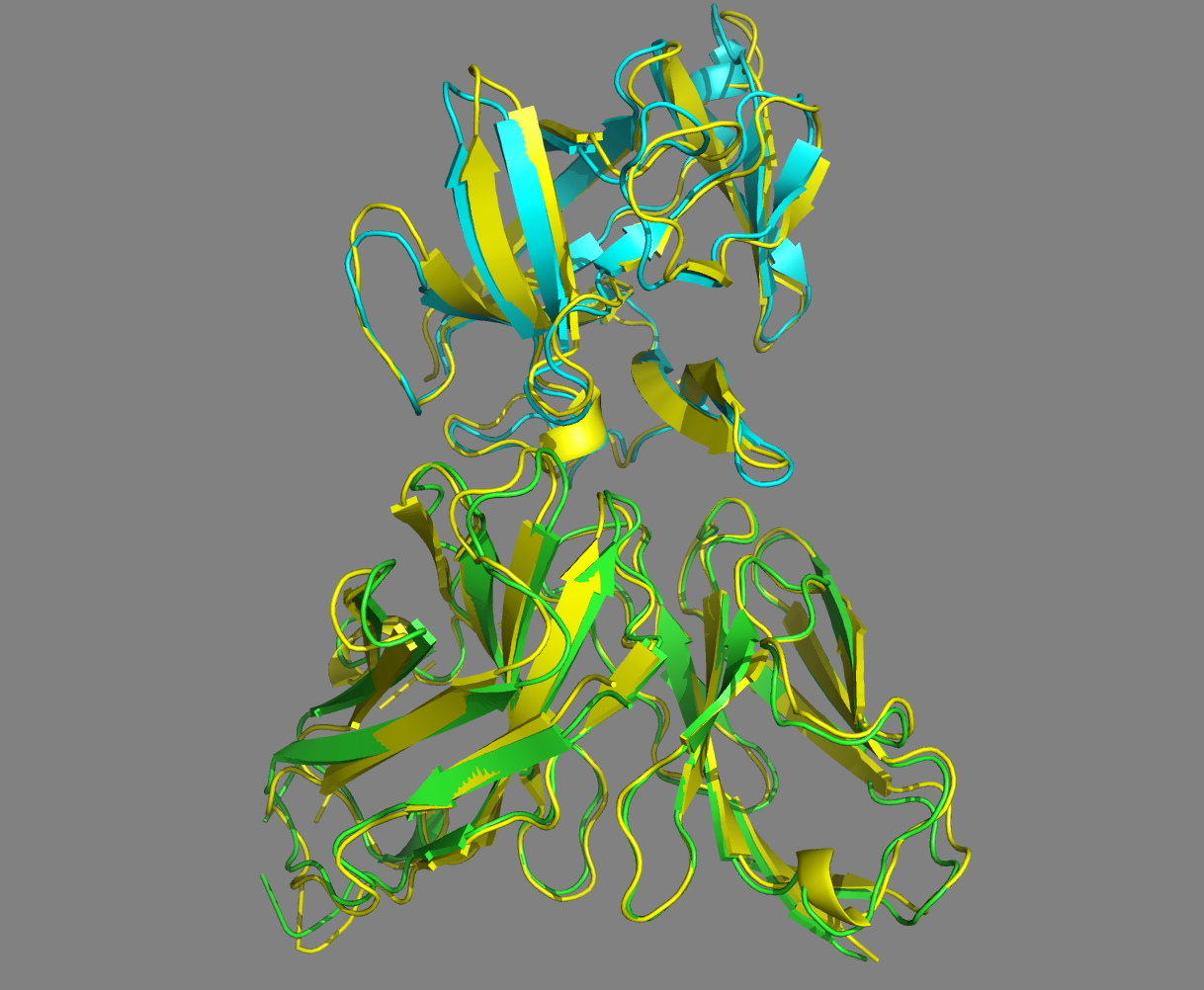

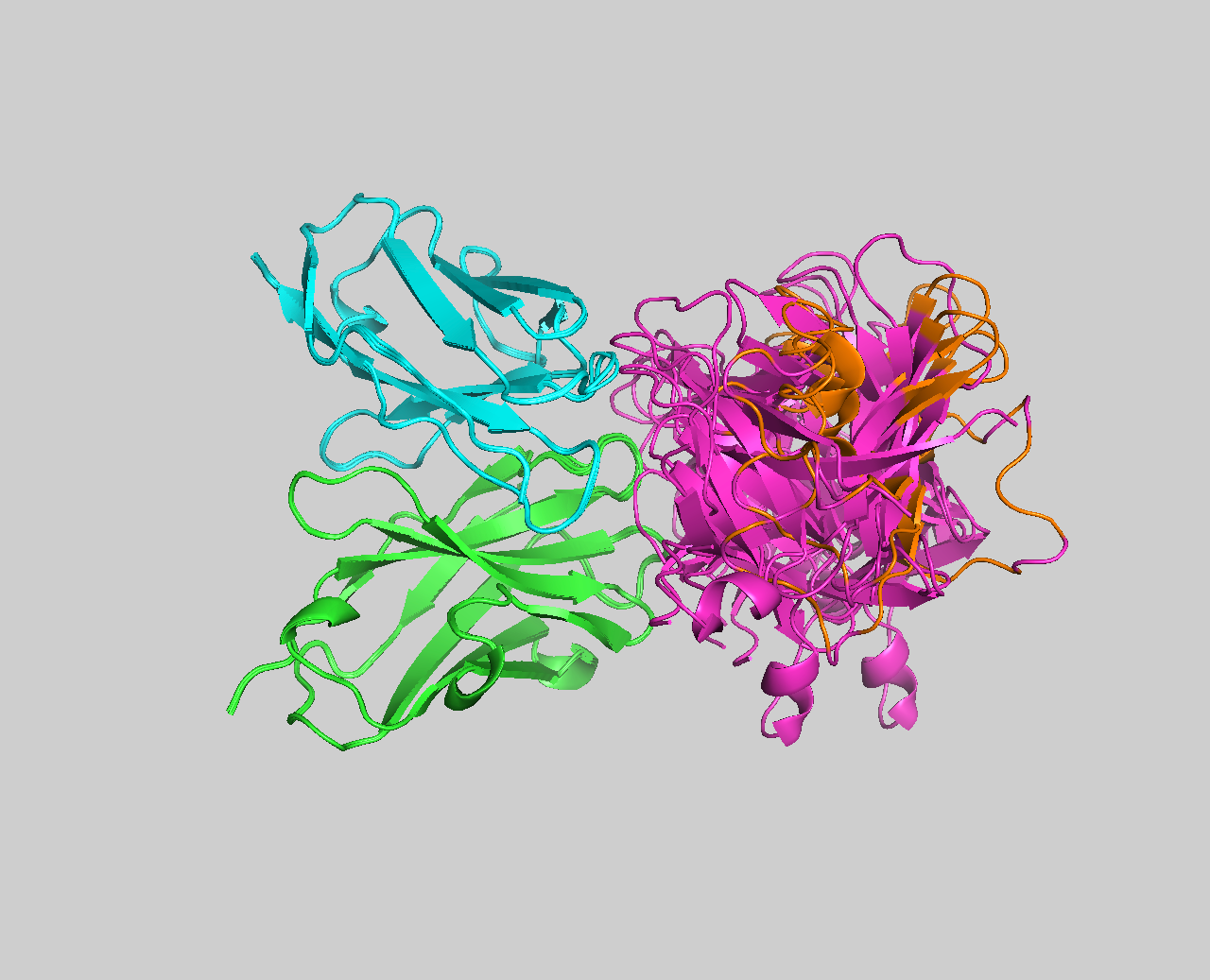

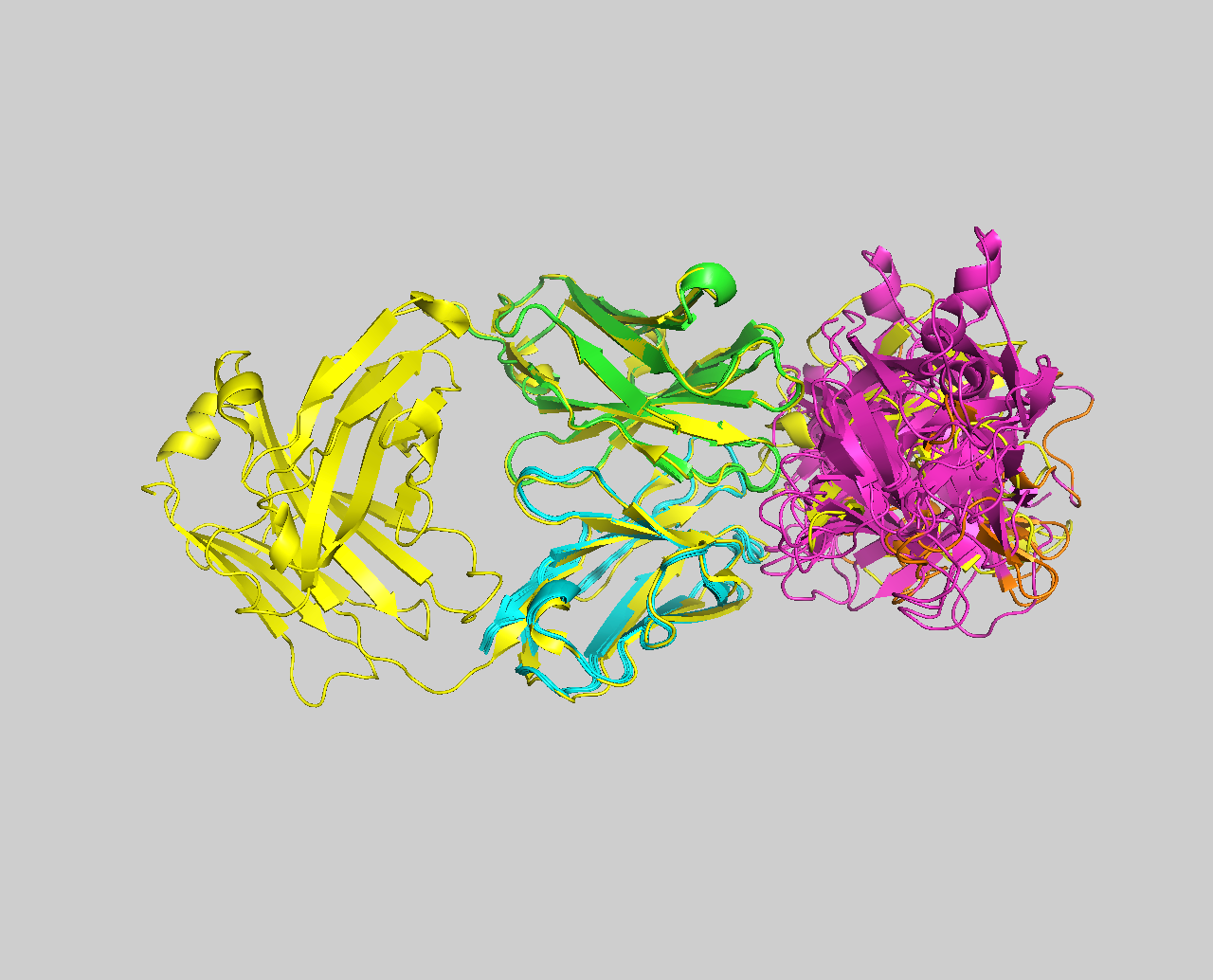

Visualization of the models

To visualize the models from the top cluster of your favorite run, start PyMOL and load the cluster representatives you want to view, e.g. this could be the top model of cluster 1, 2 or 3, located in the XX_seletopclusts directory of the run. Precalculated models can be found in the runs/run1/09_seletopclusts/ directory.

File menu -> Open -> select cluster_1_model_1.pdb

Note that the PDB files are compressed (gzipped) by default at the end of a run. You can uncompress those with the gunzip command. PyMOL can directly read the gzipped files.

If you want to get an impression of how well-defined a cluster is, repeat this for the best N models you want to view (cluster_1_model_X.pdb).
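As noted above, the models are gzipped at the end of a run. If you want to inspect a compressed model in a script without running gunzip first, Python's standard gzip module can read it directly. The sketch below is only an illustration: the helper name `count_atoms_per_chain` and the demo file name are hypothetical, and it assumes standard fixed-column PDB ATOM records (chain identifier in column 22).

```python
import gzip
import os
import tempfile
from collections import Counter


def count_atoms_per_chain(path):
    """Count ATOM records per chain in a PDB file, gzipped or not."""
    opener = gzip.open if path.endswith(".gz") else open
    counts = Counter()
    with opener(path, "rt") as handle:
        for line in handle:
            if line.startswith("ATOM"):
                counts[line[21]] += 1  # column 22 holds the chain ID
    return dict(counts)


# Self-contained demo: write a tiny gzipped PDB file, then read it back.
demo_lines = [
    "ATOM      1  CA  ASP A  58      11.000  12.000  13.000  1.00  0.00           C",
    "ATOM      2  CA  LYS B  94      14.000  12.000  13.000  1.00  0.00           C",
    "ATOM      3  CA  LYS B  98      11.000  16.000  13.000  1.00  0.00           C",
    "END",
]
with tempfile.TemporaryDirectory() as tmpdir:
    demo_path = os.path.join(tmpdir, "demo_model.pdb.gz")  # hypothetical file name
    with gzip.open(demo_path, "wt") as handle:
        handle.write("\n".join(demo_lines) + "\n")
    demo_counts = count_atoms_per_chain(demo_path)

print(demo_counts)
```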

Also load the reference structure from the pdbs directory, 4G6M_matched.pdb.

File menu -> Open -> select 4G6M_matched.pdb

Once all files have been loaded, type in the PyMOL command window:

show cartoon

util.cbc

color yellow, 4G6M_matched

Let us then superimpose all models onto the reference structure:

Let’s now check if the active residues which we have defined (the paratope and epitope) are actually part of the interface. In the PyMOL command window type:

Are the residues of the paratope and NMR epitope at the interface?

Note: You can turn on and off a model by clicking on its name in the right panel of the PyMOL window.

See the overlay of the top ranked model onto the reference structure

Top-ranked model of the top cluster (cluster_1_model_1) superimposed onto the reference crystal structure (in yellow).

Conclusions

We have demonstrated the usage of HADDOCK3 in an antibody-antigen docking scenario making use of the paratope information on the antibody side (i.e. no prior experimental information, but computational predictions) and an NMR-mapped epitope for the antigen. Compared to the static HADDOCK2.X workflow, the modularity and flexibility of HADDOCK3 allow users to customise the docking protocols and to run a deeper analysis of the results. HADDOCK3’s intrinsic flexibility can be used to improve the performance of antibody-antigen modelling compared to the results we presented in our Structure 2020 article and in the related HADDOCK2.4 tutorial.

BONUS 1: Dissecting the interface energetics: what is the impact of a single mutation?

Mutations at binding interfaces can have widely varying effects on binding affinity: some may be negligible, while others can significantly strengthen or weaken the interaction. Exploring these mutations helps identify critical amino acids for redesigning structurally characterized protein-protein interfaces, which paves the way for developing protein-based therapeutics to deal with a diverse range of diseases. To pinpoint such amino acid positions, the residues across the protein interaction surfaces are either randomly or strategically mutated. Scanning mutations in this manner is experimentally costly. Therefore, computational methods have been developed to estimate the impact of an interfacial mutation on protein-protein interactions. These computational methods come in two main flavours. One involves rigorous free energy calculations; while highly accurate, these methods are computationally expensive. The other category includes faster, approximate approaches that predict changes in binding energy using statistical potentials, machine learning, empirical scoring functions, etc. Though less precise, these faster methods are practical for large-scale screening and early-stage analysis. In this bonus exercise, we will take a look at two quick ways of estimating the effect of a single mutation in the interface.

PROT-ON and haddock3-scoring to inspect a single mutation

PROT-ON (Structure-based detection of designer mutations in PROTein-protein interface mutatiONs) is a tool and online server that scans all possible interfacial mutations and predicts their ΔΔG scores using EvoEF1 (available in both the web server and stand-alone versions) or FoldX (available only in the stand-alone version), with the aim of finding the most mutable positions. The original publication describing PROT-ON can be found here.

Here we will use PROT-ON to analyse the interface of our antibody-antigen complex. For that, we will use the provided matched reference structure (4G6M_matched.pdb), in which both chains of the antibody have the same chainID (A), which allows us to analyse all interface residues of the antibody at once.

Note: Pre-calculated PROT-ON results for this system can be accessed here.

Connect to the PROT-ON server page (link above) and fill in the following fields:

Specify your run name* –> 4G6M_matched

Choose / Upload your protein complex* –> Select the provided 4G6M_matched.pdb file

Which dimer chains should be analyzed* –> Select chain A for the 1st molecule and B for the 2nd

Pick the monomer for mutational scanning* –> Select the first molecule - the antibody (toggle the switch ON under the chain A)

Your run should complete in 5-10 minutes. Once finished, you will be presented with a result page summarising the most depleting (ones that decrease the binding affinity) and most enriching (ones that increase the binding affinity) mutations.

Which possible mutation would you propose to improve the binding affinity of the antibody?

See answer

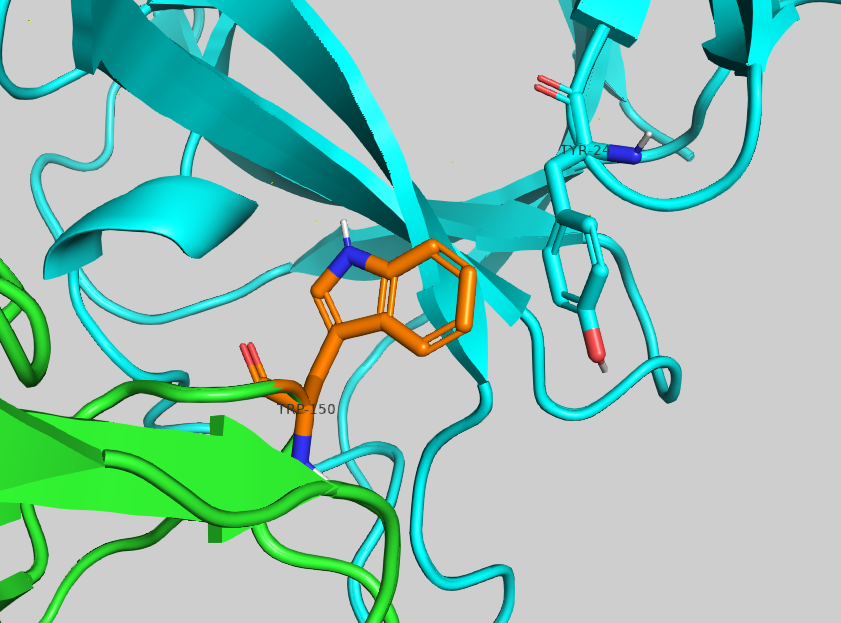

The most enriching mutation is S150W, with a ΔΔG score of -3.69. Inspect the proposed amino acid in PyMOL. Can you rationalise why it might increase the affinity?

With HADDOCK3, it is possible to take this a step further. To perform the mutation, simply rename the desired residue and score the resulting model: HADDOCK will take care of the topology, regardless of the side-chain differences, and of the energy minimisation of the model. To do so, either edit 4G6M_matched.pdb in your favourite text editor and save the new file as 4G6M_matched_S150W.pdb, or use the command line:

sed 's/SER A 150/TRP A 150/g' 4G6M_matched.pdb > 4G6M_matched_S150W.pdb
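For readers who prefer not to rely on sed pattern matching, the same renaming can be sketched in Python using the fixed PDB columns (residue name in columns 18-20, chain in column 22, residue number in columns 23-26). This is an illustrative sketch and not part of HADDOCK3; the function name `rename_residue` and the two-atom example are assumptions.

```python
def rename_residue(pdb_lines, chain, resnum, new_resname):
    """Return a copy of `pdb_lines` in which residue `resnum` of `chain`
    carries the three-letter name `new_resname` (ATOM/HETATM records only)."""
    renamed = []
    for line in pdb_lines:
        is_coord = line.startswith(("ATOM", "HETATM"))
        if is_coord and line[21] == chain and int(line[22:26]) == resnum:
            # columns 18-20 (0-based slice 17:20) hold the residue name
            line = line[:17] + f"{new_resname:>3}" + line[20:]
        renamed.append(line)
    return renamed


# Toy two-atom example (hypothetical coordinates)
wild_type = [
    "ATOM      1  CA  SER A 150      11.000  12.000  13.000  1.00  0.00           C",
    "ATOM      2  CA  SER B 150      21.000  22.000  23.000  1.00  0.00           C",
]
mutant = rename_residue(wild_type, chain="A", resnum=150, new_resname="TRP")
print("\n".join(mutant))
```

As with the sed command, only the residue name is changed; the atoms of the original side chain are left in the file.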

Next, score the mutant using the command-line tool haddock3-score.

This tool performs a short workflow composed of the topoaa and emscoring modules. Use the --outputpdb flag to save the energy-minimized model:

haddock3-score 4G6M_matched_S150W.pdb --outputpdb

Zoom in on the mutated residue

Alanine Scanning module

Another way of exploring interface energetics is by using the alascan module of HADDOCK3. alascan stands for “Alanine Scanning module”.

This module is capable of mutating interface residues to Alanine and calculating the Δ HADDOCK score between the wild-type and mutant, thus providing a measure of the impact of each individual mutation. It is possible to scan all interface residues one by one or to limit the scanning to a user-selected set of residues. By default, the mutation is to Alanine: as its side chain is just a methyl group, side-chain perturbations and possible secondary structure changes are minimal. It is also possible to mutate to any other amino acid type, at your own risk, as such mutations may introduce structural uncertainty.

Important: 1/ alascan calculates the difference between the wild-type score and the mutant score, i.e. a positive Δscore is indicative of enriched (stronger) binding and a negative Δscore is indicative of depleted (weaker) binding; 2/ Inside alascan, a short energy minimization of the input structure is performed, i.e. there is no need to include an additional refinement module prior to alascan.
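The sign convention can be checked with a few lines of Python. The sketch below is not HADDOCK3 code: the function names are hypothetical, and the scores are the native, ASP58-to-ALA and GLY33-to-ALA values from the example alascan output listed later in this section.

```python
def delta_score(wild_type_score, mutant_score):
    """Delta score as described above: wild-type score minus mutant score."""
    return wild_type_score - mutant_score


def interpret(delta):
    """Positive delta: enriched (stronger) binding; negative: depleted (weaker)."""
    if delta > 0:
        return "enriching"
    if delta < 0:
        return "depleting"
    return "neutral"


# Scores taken from the example alascan output shown later in this section
native = -145.5891
mutant_d58a = -121.65  # ASP58 -> ALA
mutant_g33a = -148.50  # GLY33 -> ALA

for label, score in [("D58A", mutant_d58a), ("G33A", mutant_g33a)]:
    delta = delta_score(native, score)
    print(f"{label}: delta_score = {delta:.2f} ({interpret(delta)})")
```

A mutant whose HADDOCK score is less negative than the wild-type score therefore ends up with a negative Δscore, i.e. a predicted weakening of the binding.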

Here is an example of the workflow to scan interface energetics:

# ====================================================================

# Scanning interface residues with haddock3

# ====================================================================

# directory in which the scoring will be done

run_dir = "run-energetics-alascan"

# compute mode

mode = "local"

ncores = 50

# Post-processing to generate statistics and plots

postprocess = true

clean = true

molecules = [

"pdbs/4G6M_matched.pdb",

]

# ====================================================================

# Parameters for each stage are defined below

# ====================================================================

[topoaa]

[alascan]

# mutate each interface residue to Alanine

scan_residue = 'ALA'

# generate plot of delta score and its components per each mutation

plot = true

# ====================================================================

A scoring scenario configuration file is provided in the workflows/ directory as interaction-energetics.cfg, and precomputed results are in runs/run-energetics-alascan.

The output folder contains, among others, a directory titled 1_alascan with a file scan_4G6M_matched_haddock.tsv that lists each mutation, corresponding score and individual terms:

##########################################################

# `alascan` results for 4G6M_matched_haddock.pdb

#

# native score = -145.5891

#

# z_score is calculated with respect to the other residues

##########################################################

| chain | res | ori_resname | end_resname | score | vdw | elec | desolv | bsa | delta_score | delta_vdw | delta_elec | delta_desolv | delta_bsa | z_score |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| A | 212 | LYS | ALA | -136.33 | -66.16 | -367.66 | 3.37 | 1660.53 | -9.26 | 2.52 | -75.12 | 3.24 | 37.57 | -0.48 |
| A | 103 | ASP | ALA | -129.64 | -59.93 | -365.23 | 3.34 | 1677.97 | -15.95 | -3.71 | -77.56 | 3.27 | 20.13 | -1.41 |
| A | 54 | TRP | ALA | -138.18 | -58.34 | -435.53 | 7.27 | 1690.80 | -7.41 | -5.30 | -7.26 | -0.66 | 7.30 | -0.22 |
| A | 32 | SER | ALA | -143.66 | -60.55 | -447.37 | 6.36 | 1691.72 | -1.93 | -3.09 | 4.59 | 0.24 | 6.38 | 0.55 |
| A | 58 | ASP | ALA | -121.65 | -63.49 | -306.77 | 3.20 | 1639.20 | -23.94 | -0.15 | -136.01 | 3.41 | 58.90 | -2.52 |
| A | 33 | GLY | ALA | -148.50 | -61.56 | -473.22 | 7.71 | 1693.18 | 2.91 | -2.08 | 30.43 | -1.10 | 4.92 | 1.22 |

...

You can use the additional script scripts/get-alascan-extrema.sh to check your answer:

bash scripts/get-alascan-extrema.sh run-energetics-alascan/1_alascan/scan_4G6M_matched_haddock.tsv
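The content of the shell script is not reproduced here. As a rough Python illustration of how the most depleting and most enriching mutations can be extracted from such a table, consider the sketch below. It assumes a whitespace- or tab-separated file in which lines starting with # are comments and the first remaining line is the header; the function names are hypothetical and the sample rows are the ones listed above.

```python
def read_alascan_table(lines):
    """Parse alascan-style lines into a list of dicts.

    Lines starting with '#' are treated as comments; the first remaining
    line is taken as the header (an assumption about the file layout).
    """
    rows = [
        line.split()
        for line in lines
        if line.strip() and not line.startswith("#")
    ]
    header, records = rows[0], rows[1:]
    return [dict(zip(header, record)) for record in records]


def extrema(records, key="delta_score"):
    """Return the (most depleting, most enriching) records according to `key`."""
    ranked = sorted(records, key=lambda rec: float(rec[key]))
    return ranked[0], ranked[-1]


# Rows copied from the example output above
sample = """\
# native score = -145.5891
chain res ori_resname end_resname score vdw elec desolv bsa delta_score delta_vdw delta_elec delta_desolv delta_bsa z_score
A 212 LYS ALA -136.33 -66.16 -367.66 3.37 1660.53 -9.26 2.52 -75.12 3.24 37.57 -0.48
A 103 ASP ALA -129.64 -59.93 -365.23 3.34 1677.97 -15.95 -3.71 -77.56 3.27 20.13 -1.41
A 54 TRP ALA -138.18 -58.34 -435.53 7.27 1690.80 -7.41 -5.30 -7.26 -0.66 7.30 -0.22
A 32 SER ALA -143.66 -60.55 -447.37 6.36 1691.72 -1.93 -3.09 4.59 0.24 6.38 0.55
A 58 ASP ALA -121.65 -63.49 -306.77 3.20 1639.20 -23.94 -0.15 -136.01 3.41 58.90 -2.52
A 33 GLY ALA -148.50 -61.56 -473.22 7.71 1693.18 2.91 -2.08 30.43 -1.10 4.92 1.22
""".splitlines()

records = read_alascan_table(sample)
worst, best = extrema(records)
for tag, rec in [("most depleting", worst), ("most enriching", best)]:
    print(f"{tag}: {rec['ori_resname']}{rec['res']} -> {rec['end_resname']} "
          f"(delta_score = {rec['delta_score']})")
```

To apply it to a real file, pass an open file handle instead of the sample lines, e.g. `read_alascan_table(open(path))`. Note that the sample contains only the six rows shown above, so the extrema of the complete table may differ.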

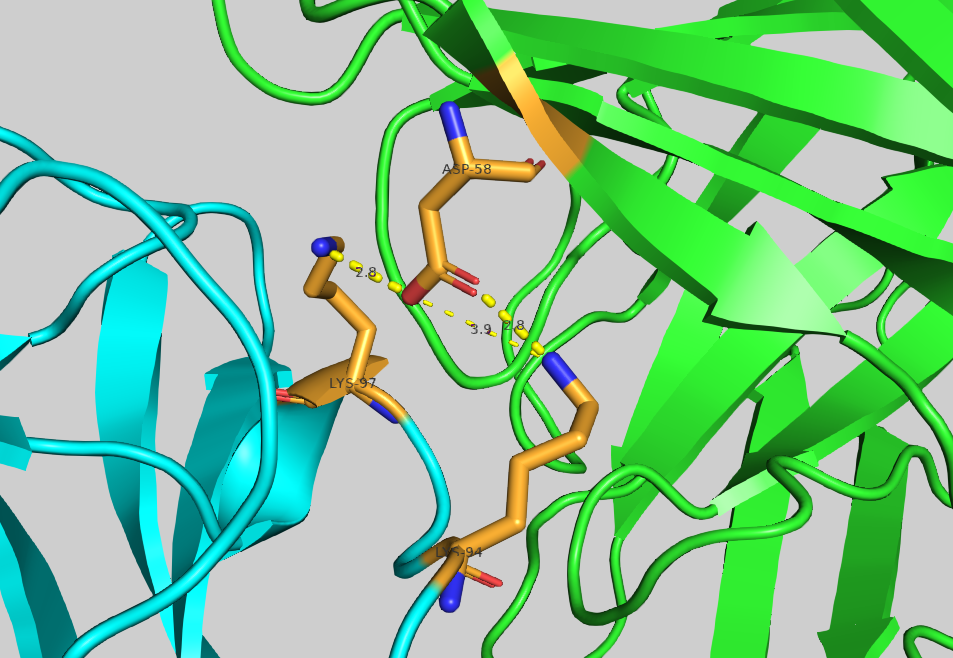

Mutation of residue ASP58 turned out to be the most depleting within chain A. Let us visualise it in PyMOL to analyse its contribution to the binding:

File menu -> Open -> 4G6M_matched.pdb

Display ASP58 as sticks and colour it by atom:

util.cbc

select asp58, (resi 58 and chain A)

show sticks, asp58

util.cbao asp58

Now visualise its neighbouring residues:

select asp58_neighbour_atoms_4A, (resi 58 and chain A) around 4 and chain B

select asp58_neighbour_residues, byres asp58_neighbour_atoms_4A

show sticks, asp58_neighbour_residues

util.cbao asp58_neighbour_residues

Let us display the contacts using the show contacts plugin:

We can see one hydrogen bond between ASP58 and LYS98, and two hydrogen bonds between ASP58 and LYS94.

Mutating ASP58 to ALA should result in the disappearance of those hydrogen bonds, and in an overall weakening of the binding.

This is reflected by the large negative value (-136.01) of delta_elec in either of the .tsv files.

Let us test several mutations to confirm our hypothesis. Here is an example of the workflow to perform such mutations and save generated models:

# ====================================================================

# Mutating selected interface residue with haddock3

# ====================================================================

# directory in which the scoring will be done

run_dir = "run-energetics-mutations"

# compute mode

mode = "local"

ncores = 50

# Post-processing to generate statistics and plots

postprocess = true

clean = true

molecules = ["pdbs/4G6M_matched.pdb"]

# ====================================================================

# Parameters for each stage are defined below

# ====================================================================

[topoaa]

[alascan]

# mutate residue 58 of chain A to Arginine

scan_residue = "ARG"

resdic_A = [58]

# save energy-minimised mutant model

output_mutants = true

[alascan]

scan_residue = "GLY"

resdic_A = [58]

output_mutants = true

[alascan]

scan_residue = "TRP"

resdic_A = [58]

output_mutants = true

The configuration file for this scenario can be found in workflows/single-residue-mutations.cfg; precomputed results are in run-residue-mutations. The output folder contains, among others, an energy-minimised mutant model 1_alascan/4G6M_matched_haddock-A_D58R.pdb.gz, and .tsv tables with the energetics.

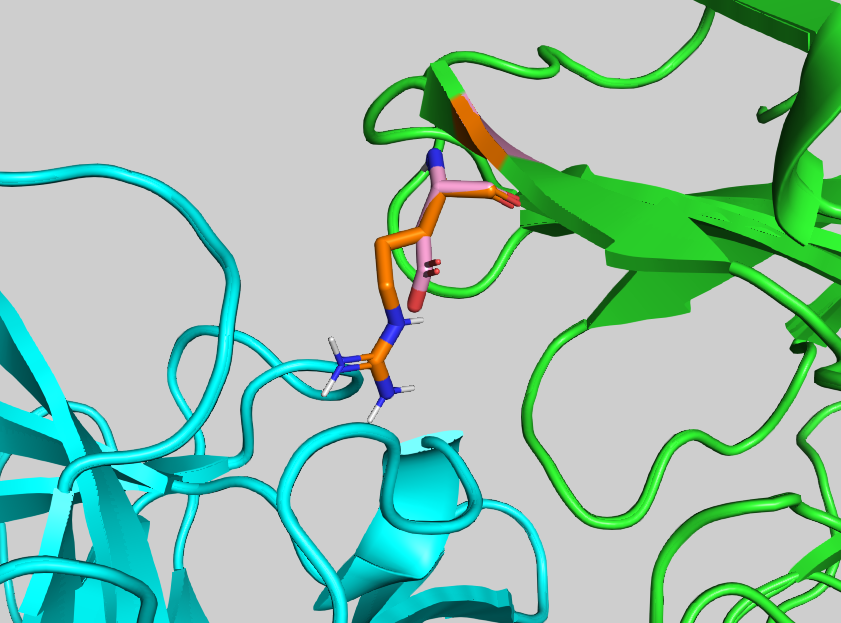

Take a look at the scores of the mutants. Which mutation depletes binding the most?

Inspect the mutant vs wild-type complex. Can you see the difference at the interface level?

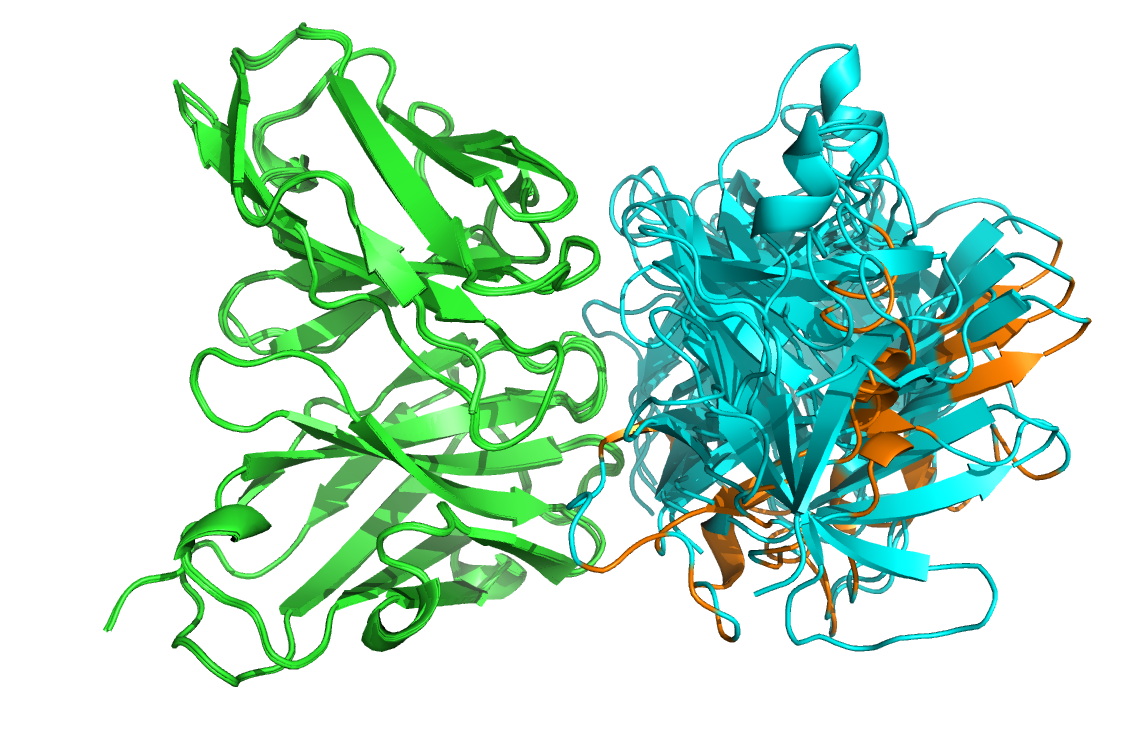

See the overlay of the mutant onto the wild-type structure

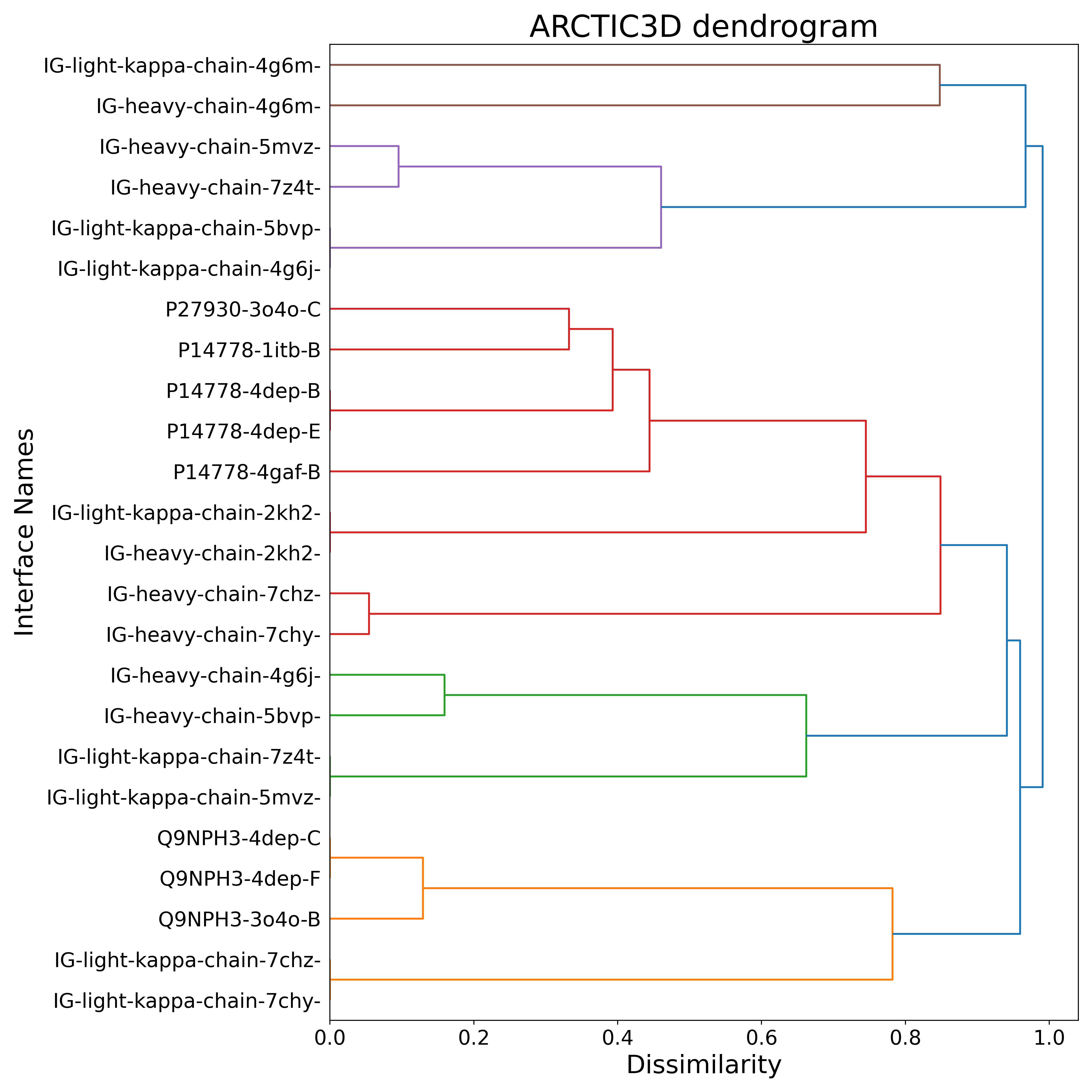

See answer